I am Junha (June) Song, a Ph.D. candidate at KAIST, advised by Prof. Jaegul Choo. I previously completed my M.S. degree at KAIST under the supervision of Prof. In So Kweon. I was a visiting scholar at Carnegie Mellon University (CMU) in 2024, and a research intern at NAVER AI Lab in 2025, Lunit in 2023, and Qualcomm AI Research in 2022. I invite you to explore my blog, where you'll find that I am a highly self-motivated researcher. My ultimate goal is to develop AI technologies that benefit people from all walks of life, regardless of their socioeconomic background.

Research Experiences

- NAVER AI Lab

Apr 2025 - Oct 2025

AI Research Intern

Mentors: Byeongho Heo, Sangdoo Yun, and Dongyoon Han

- Carnegie Mellon University

Aug 2024 - Feb 2025

Visiting Scholar in Computer Science (Korean Government Fellowship)

Collaborating with Prof. Yonatan Bisk's research group

- Lunit

Jun 2023 - Dec 2023

AI Research Intern

Mentors: Tae Soo Kim and Thijs Kooi

- Qualcomm

Jul 2022 - Dec 2022

AI Research Intern

Mentor: Sungha Choi

Publications

RL makes MLLMs see better than SFT

Junha Song, Sangdoo Yun, Dongyoon Han, Jaegul Choo, and Byeongho Heo

In the International Conference on Learning Representations (ICLR), 2026.

[arXiv], [project page]

MM-SeR: Multimodal Self-Refinement for Lightweight Image Captioning.

Junha Song, Yongsik Jo, So Yeon Min, Quanting Xie, Taehwan Kim, Yonatan Bisk, and Jaegul Choo

arXiv preprint, 2025

[arXiv], [project page], [code]

Is user feedback always informative? Retrieval Latent Defending for Semi-Supervised Domain Adaptation without Source Data.

Junha Song, Tae Soo Kim, Gunhee Nam, Junha Kim, Thijs Kooi, and Jaegul Choo

In the European Conference on Computer Vision (ECCV), 2024

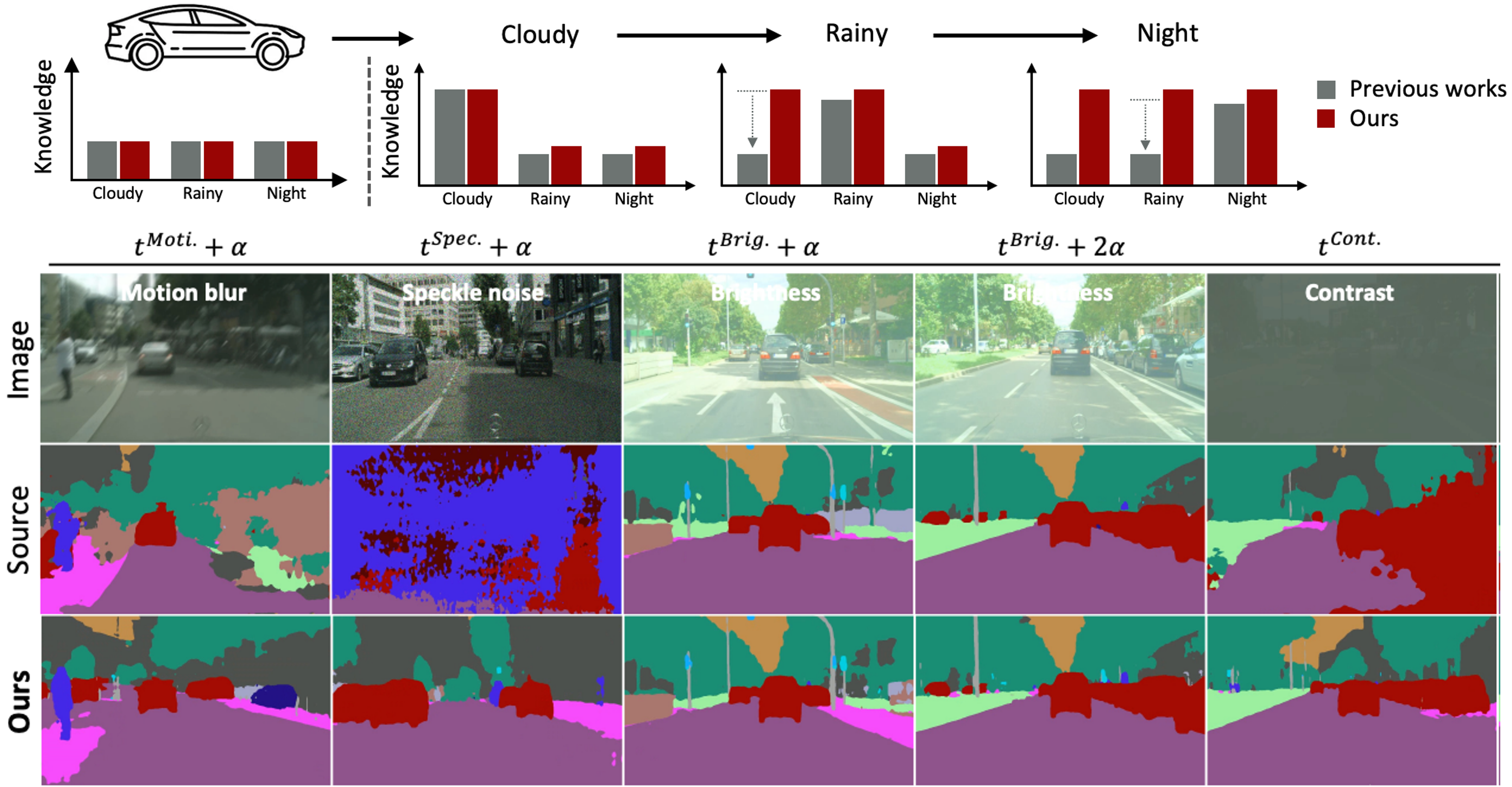

[arXiv], [project page], [code]

Test-time Adaptation in the Dynamic World with Compound Domain Knowledge Management.

Junha Song, Kwanyong Park, Inkyu Shin, Sanghyun Woo, Chaoning Zhang, and In So Kweon

In IEEE Robotics and Automation Letters (RA-L, ICRA Oral), 2024

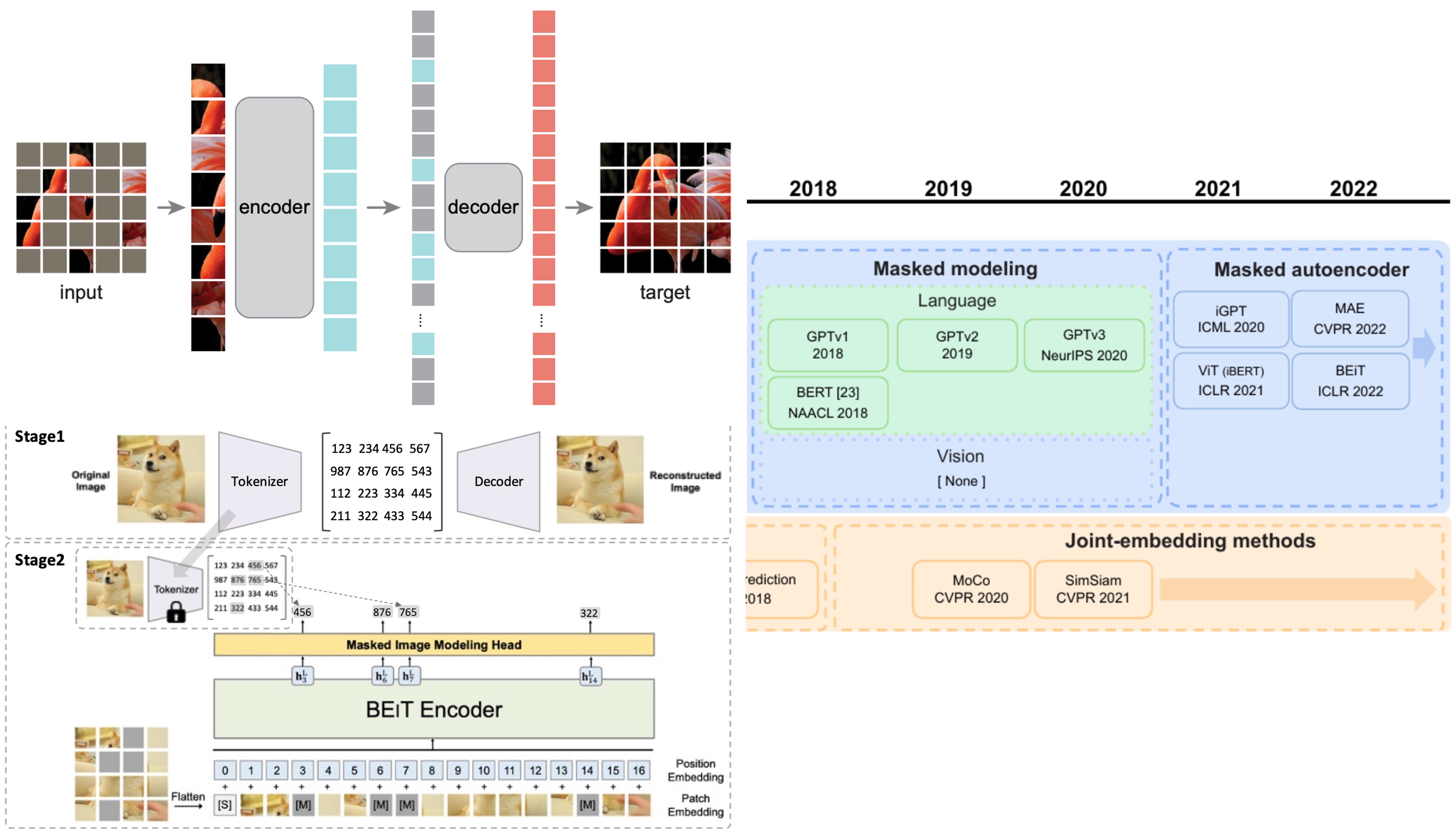

[arXiv], [IEEE], [YouTube]

A Survey on Masked Autoencoder for Self-supervised Learning in Vision and Beyond.

Chaoning Zhang, Chenshuang Zhang, Junha Song, John Seon Keun Yi, and In So Kweon

In the International Joint Conference on Artificial Intelligence (IJCAI), 2023.

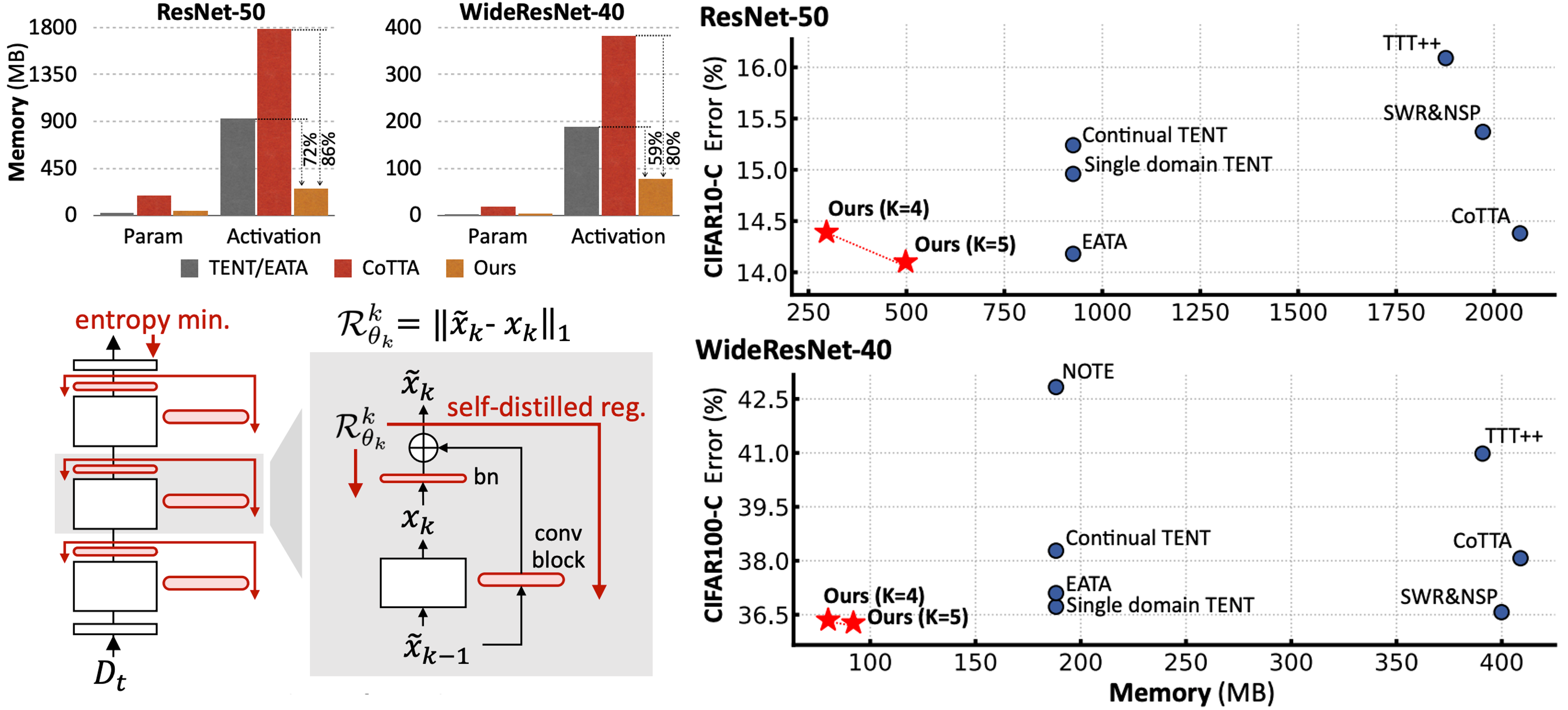

[arXiv], [slide]

EcoTTA: Memory-Efficient Continual Test-time Adaptation via Self-distilled Regularization.

Junha Song, Jungsoo Lee, In So Kweon, and Sungha Choi

In the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 2023.

[arXiv], [project page]

Patents

- Test-time adaptation via self-distilled regularization

Junha Song and Sungha Choi. 15 May, 2024. [U.S. Patent No. US20240160926A1]

Research Interests

- Human-Like Perception in Multimodal AI Systems

- While multimodal large language models (MLLMs) have demonstrated remarkable progress in aligning vision and language, their visual perception remains far from human-level understanding. I am particularly interested in enhancing these models’ perceptual accuracy through techniques such as reinforcement learning (arXiv'25) and self-corrective adaptation (arXiv'25). Building upon this, my research explores how MLLMs can perform human-like visual reasoning — imagining future states and understanding complex visual contexts. Ultimately, I aim to develop deployable multimodal systems that bring these capabilities to real-world applications, expanding the accessibility and societal impact of AI.

- On-device adaptation frameworks

- Despite advances in deep learning, AI models often suffer performance degradation when their environment changes. For example, the perception ability of a self-driving car can vary with time, weather, and city or state. To address this, I am fascinated by adaptation techniques, such as adaptation with user-provided feedback (ECCV'24) and test-time adaptation (CVPR'23 and ICRA'23). I believe these techniques are key to ensuring robust model performance and, ultimately, to building reliable AI applications.

Education

- Korea Advanced Institute of Science and Technology (KAIST)

Aug 2023 - Present

Ph.D. student in the Graduate School of AI

Advisor: Prof. Jaegul Choo

- Korea Advanced Institute of Science and Technology (KAIST)

Feb 2021 - Feb 2023

M.S. degree in the Division of Future Vehicle

Advisor: Prof. In So Kweon

Grade: 3.9 / 4.3 (Percent: 95.56/100)

- Kookmin University (Seoul, South Korea)

Feb 2015 - Feb 2021

B.S. degree in IT and Automobile Engineering

Grade: 4.39 / 4.5 (Rank: 1/121 | Percent: 98.7/100 | Major: 4.43)

National Science and Engineering Scholarship (Full tuition) from Korea Student Aid Foundation

Mandatory Military Service for 21 Months

Awards and Honors

- Intensive 2-Week Guest Lecturer, Introduction to Deep Learning, LG Innotek (2024)

- Best Master's Thesis Award, Korea Advanced Institute of Science and Technology (KAIST) (2023)

- Lecture planning consultant, Fast Campus (2022)

- Industry-University Scholarship (Full tuition support for M.S. program), Hyundai Mobis (2021–2022)

- National Science and Engineering Scholarship (Full tuition support for B.S. program), Korea Scholarship Foundation (2019–2021)

- Future Transport Design Award and Honorable Judge Award, 'Vehicle monitoring over internet toward digital twins', Cloud Programming World Cup, Japan (2019)

- Capstone Awards, Korean Society of Automotive Engineers (2019)

Projects

- Development of real-time masking/unmasking system for personal video information for public services such as CCTV (article), Korea Ministry of Science and ICT (2021 - 2023)

- Development of segmentation networks robust to environment variance, Hyundai Mobis (2021)

- Satellite image precision object detection, Korea Agency for Defense Development (ADD) (2020)

- Detection of Surrounding Vehicles using Deep Neural Network and Fusion of Panoramic Camera and Lidar Sensor, Korea Foundation for the Advancement of Science and Creativity (KORAC), Korea (2019)

B.S. Research Experiences

- "Style Transfer Maps from Satellite Images by using Generative Model", Korean Institute of Communications and Information Science (KICS) (2020)

- "Improvement of LiDAR and IMU-based autonomous driving performance in right-angle corner situations", Korean Society of Automotive Engineers (2019)

- Research Intern at Machine Intelligence Lab, Kookmin University (Dec 2019 - Oct 2020)

- Research Intern at Intelligence and Interaction Lab, Kookmin University (Feb 2019 - Nov 2019)

Skills

- Programming languages: Python, C++

- Machine learning libraries: PyTorch, TensorFlow

- Application development: Robot Operating System (ROS)

- Sensor utilization: Camera, RGB-D Camera, LiDAR, GPS/IMU