[FL] Federated learning papers 1


1. FedMatch: Federated Semi-Supervised Learning with Inter-Client Consistency & Disjoint Learning ICLR21

- Overview: [global, locals SemiSL] (T) semi-supervised clients are trained with pseudo labels generated by (M) helper models. The global performance of the average of client models is reported.

- Introduction

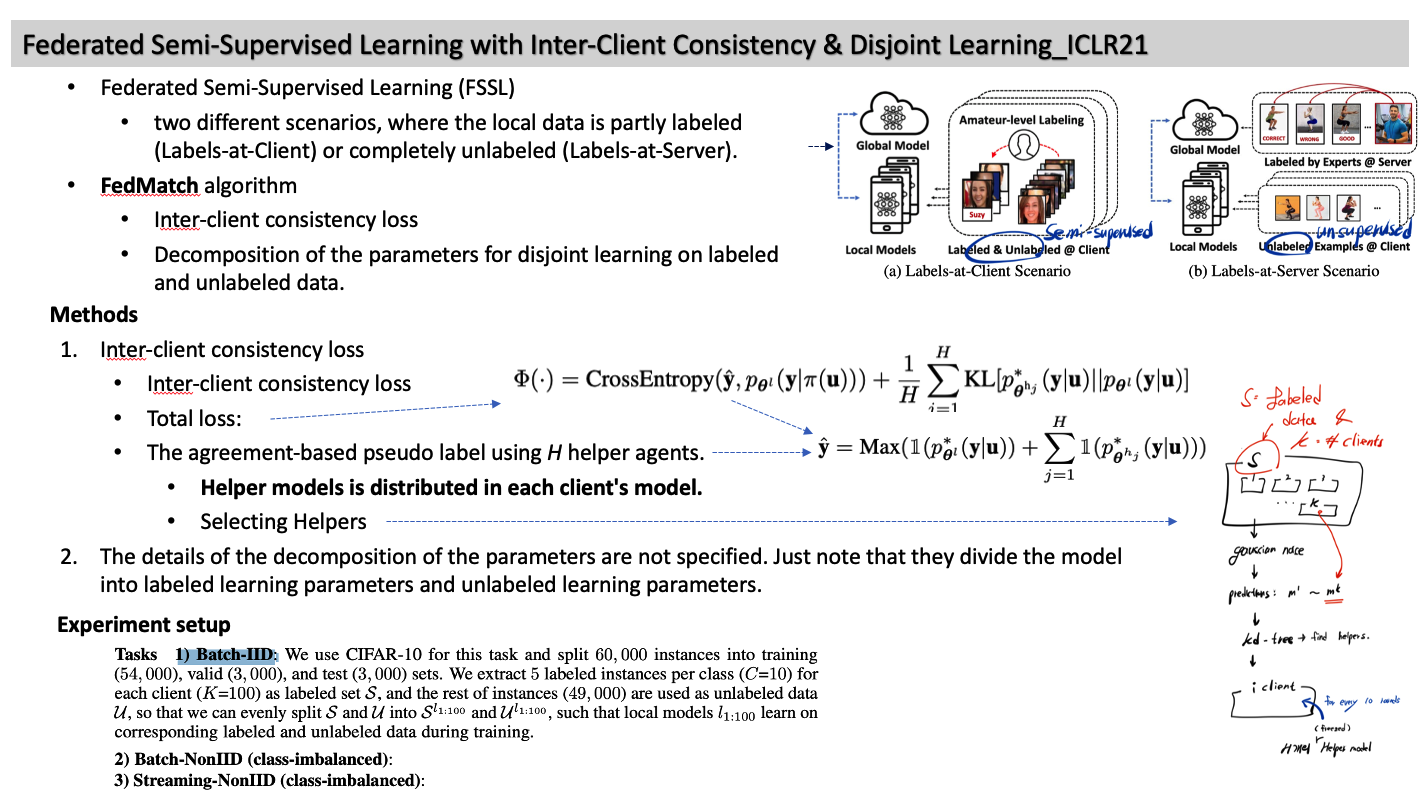

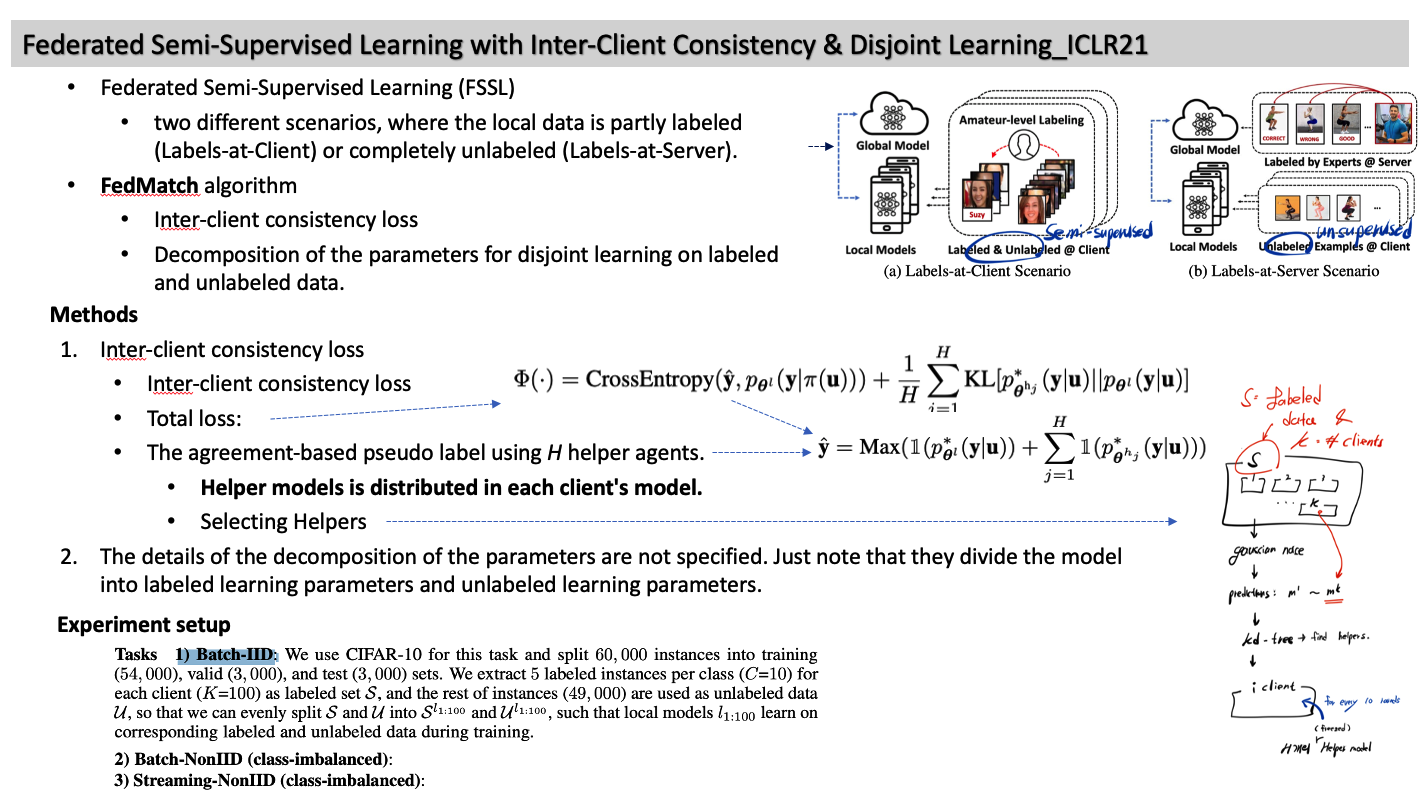

- Federated Semi-Supervised Learning (FSSL)

- Two scenarios: the local data is either partly labeled (Labels-at-Client) or completely unlabeled (Labels-at-Server).

- FedMatch algorithm

- Inter-client consistency loss

- Decomposition of the parameters for disjoint learning on labeled and unlabeled data.
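A minimal sketch of disjoint learning, assuming the parameters are split additively into one part updated only on labeled data and one part updated only on unlabeled data. The names `sigma`, `psi`, `combine`, and `sgd_step` are illustrative, and the gradients are placeholders rather than real losses.

```python
def combine(sigma, psi):
    """Effective model parameters: element-wise sum of the two parts (assumed split)."""
    return [s + p for s, p in zip(sigma, psi)]


def sgd_step(params, grads, lr=0.1):
    """Plain gradient-descent update applied to one parameter group."""
    return [p - lr * g for p, g in zip(params, grads)]


sigma = [0.5, -0.2]  # updated on labeled data only
psi = [0.0, 0.0]     # updated on unlabeled data only

# Supervised step: psi is frozen, only sigma moves.
sup_grads = [1.0, -1.0]  # placeholder gradient of a supervised loss
sigma = sgd_step(sigma, sup_grads)

# Unsupervised step: sigma is frozen, only psi moves.
unsup_grads = [0.5, 0.5]  # placeholder gradient of a consistency loss
psi = sgd_step(psi, unsup_grads)

theta = combine(sigma, psi)
```

Keeping the two groups separate means an update driven by unlabeled data cannot overwrite what was learned from labeled data, which is the point of the disjoint split.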

- Method

- Inter-client consistency loss


- Total loss:

- The agreement-based pseudo label using H helper agents.

- The helper models are distributed to each client.

- Selecting Helpers

- The details of the parameter decomposition are not specified here. Just note that the model is divided into parameters for learning on labeled data and parameters for learning on unlabeled data.
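The agreement-based pseudo label with H helper models can be sketched as a simple vote. This is a simplified illustration, not necessarily the paper's exact formulation: the confidence `threshold` and the function names are assumptions.

```python
def one_hot_vote(probs, threshold=0.75):
    """Return the argmax class if its confidence reaches the threshold, else None."""
    best = max(range(len(probs)), key=lambda c: probs[c])
    return best if probs[best] >= threshold else None


def agreement_pseudo_label(local_probs, helper_probs, threshold=0.75):
    """Majority vote over the local model and the H helper models."""
    votes = [0] * len(local_probs)
    for probs in [local_probs] + helper_probs:
        vote = one_hot_vote(probs, threshold)
        if vote is not None:
            votes[vote] += 1
    if max(votes) == 0:
        return None  # no model is confident enough; skip this sample
    return max(range(len(votes)), key=lambda c: votes[c])


local = [0.1, 0.8, 0.1]                            # local model prediction
helpers = [[0.05, 0.9, 0.05], [0.85, 0.1, 0.05]]   # H = 2 helper predictions
label = agreement_pseudo_label(local, helpers)
```

Here two of the three models vote for class 1, so class 1 becomes the pseudo label even though one helper disagrees.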

2. RobustHFL: Robust Federated Learning with Noisy and Heterogeneous Clients CVPR22

- Overview: [local, locals SL] (T) model-heterogeneous federated learning with clients' noisy labels using (M) reliability-based collaborative learning with a KD loss between clients.

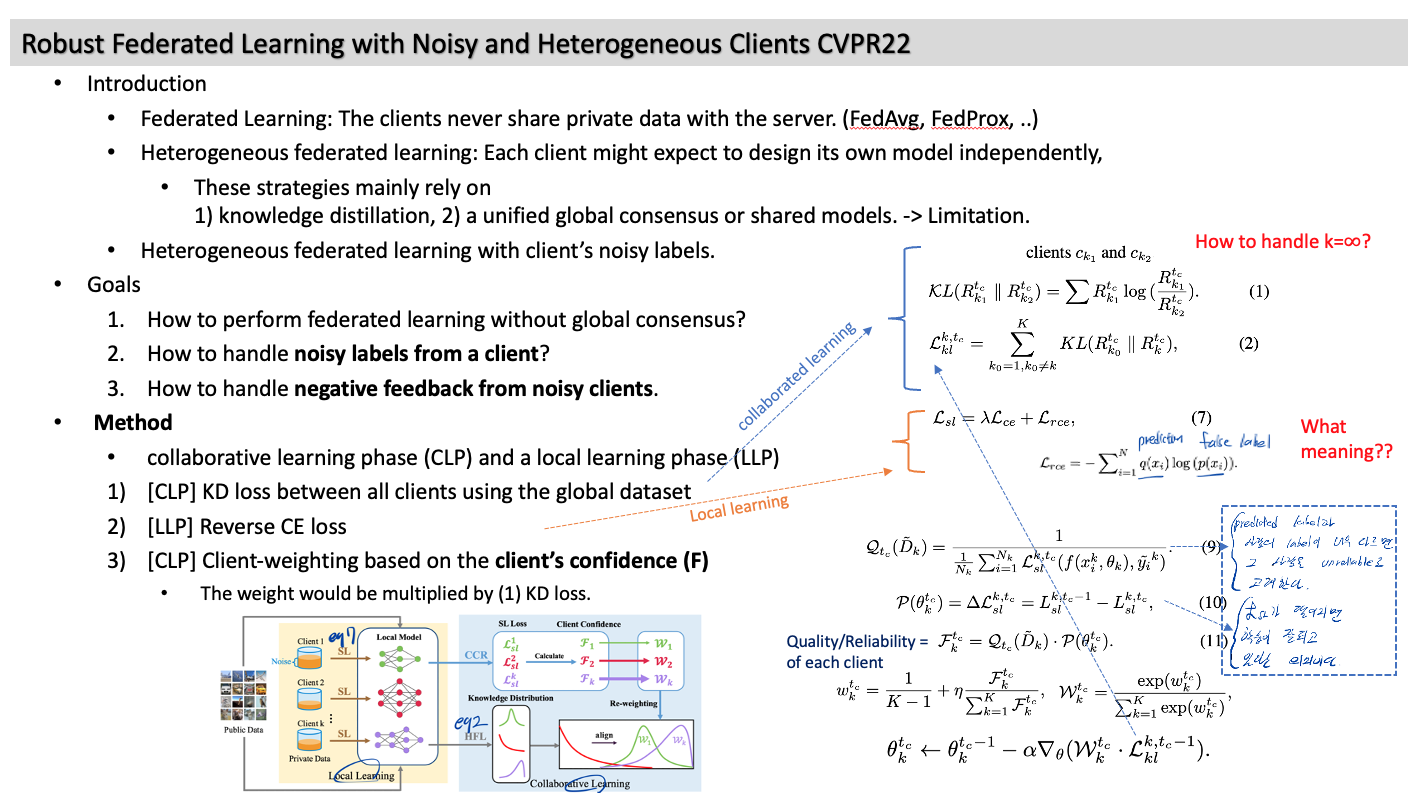

- Introduction

- Federated Learning: The clients never share private data with the server. (FedAvg, FedProx, ..)

- Heterogeneous federated learning: Each client may want to design its own model independently.

- Existing strategies mainly rely on 1) knowledge distillation or 2) a unified global consensus or shared models, which is a limitation.

- Heterogeneous federated learning with clients' noisy labels.

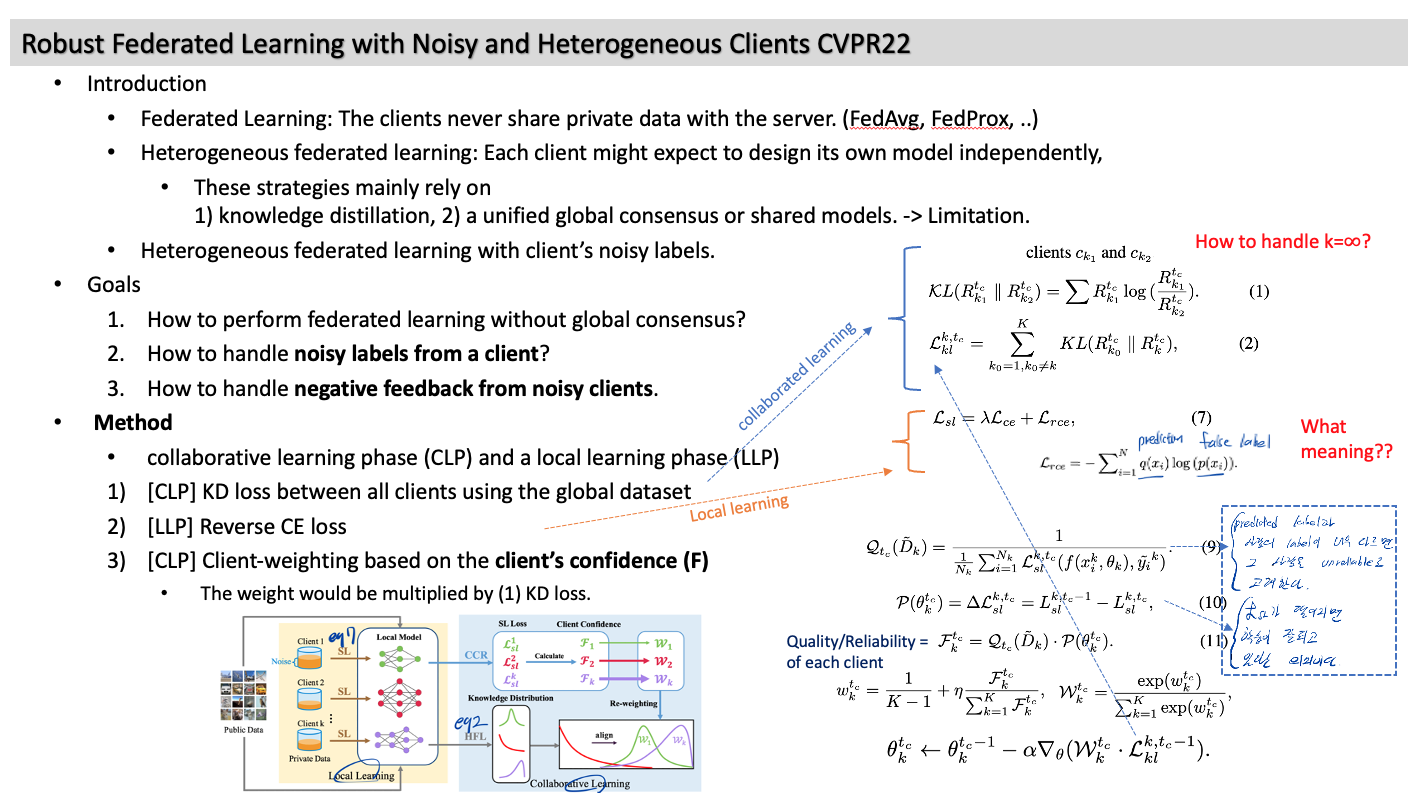

- Goals

- How to perform federated learning without global consensus?

- How to handle noisy labels from a client?

- How to handle negative feedback from noisy clients?

- Method

- Two phases: a collaborative learning phase (CLP) and a local learning phase (LLP)

- [CLP] KD loss between all clients using the global dataset

- [LLP] Reverse CE loss

- [CLP] Client weighting based on each client's confidence (F)

- The weight is multiplied by the KD loss (1).
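A minimal sketch of the confidence-weighted KD loss in the collaborative phase, assuming each client outputs a class distribution on the same public sample and that a per-client weight is already available. The KL direction, the `weights` values, and the function names are assumptions for illustration.

```python
import math


def kl_div(p, q, eps=1e-12):
    """KL(p || q) for two discrete distributions."""
    return sum(pi * math.log((pi + eps) / (qi + eps)) for pi, qi in zip(p, q))


def weighted_kd_loss(k, outputs, weights):
    """KD loss for client k: weighted KL from every other client's output to k's."""
    return sum(
        weights[j] * kl_div(outputs[j], outputs[k])
        for j in range(len(outputs))
        if j != k
    )


# Outputs of three clients on one public sample; client 2 plays the noisy client.
outputs = [[0.7, 0.2, 0.1], [0.6, 0.3, 0.1], [0.2, 0.3, 0.5]]
weights = [0.4, 0.4, 0.2]  # hypothetical confidence-based weights
loss_0 = weighted_kd_loss(0, outputs, weights)
```

Giving a low weight to a client with unreliable outputs shrinks its contribution to the other clients' KD loss, which is how the weighting limits negative feedback from noisy clients.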

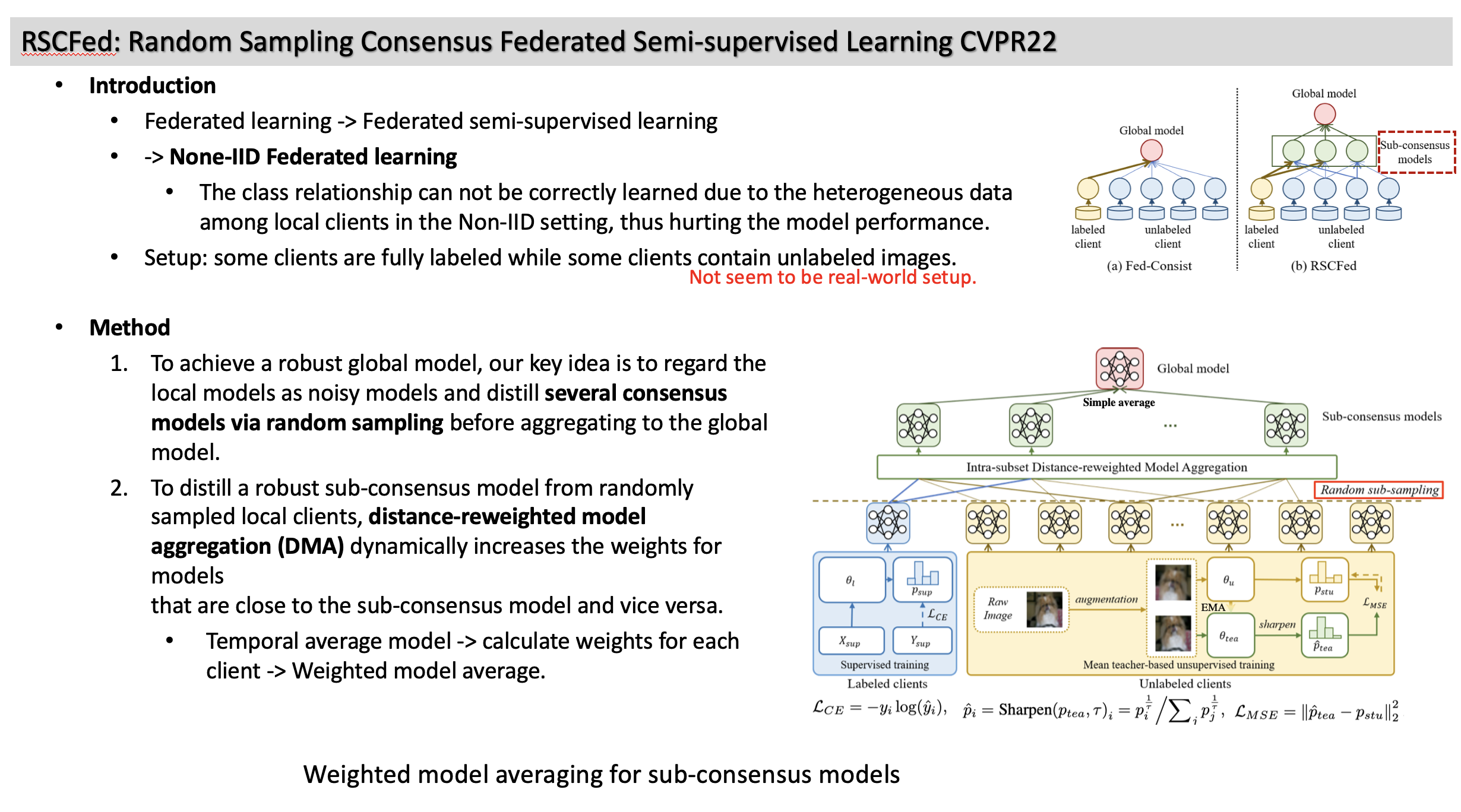

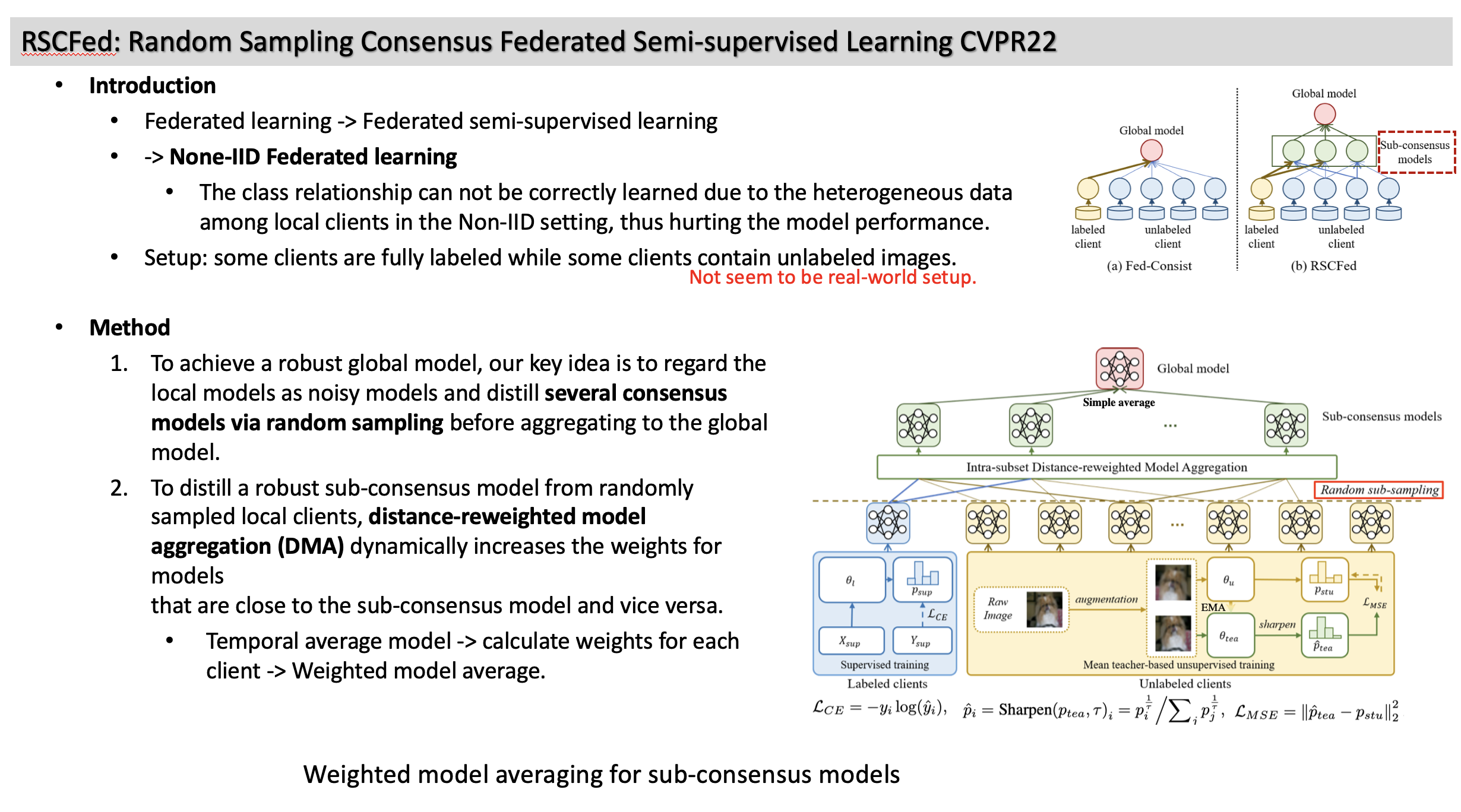
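The reverse CE loss used in the local learning phase swaps the roles of the prediction and the one-hot label. A minimal sketch follows; the clipping constant `log_zero` (standing in for log 0) is an assumed hyperparameter, and the function names are illustrative.

```python
import math


def cross_entropy(pred, label, eps=1e-7):
    """Standard CE: -log of the predicted probability of the labeled class."""
    return -math.log(max(pred[label], eps))


def reverse_cross_entropy(pred, label, log_zero=-4.0):
    """Reverse CE: -sum_c pred[c] * log(onehot[c]).

    log(1) = 0 for the labeled class, so only the other classes contribute,
    with log(0) replaced by the constant `log_zero`.
    """
    return -sum(p * log_zero for c, p in enumerate(pred) if c != label)


pred = [0.7, 0.2, 0.1]
ce = cross_entropy(pred, label=0)
rce = reverse_cross_entropy(pred, label=0)
```

Unlike CE, the reverse term is bounded by `-log_zero`, so a single mislabeled sample cannot produce an arbitrarily large loss.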

3. RSCFed: Random Sampling Consensus Federated Semi-supervised Learning CVPR22

- Overview: [global, local SL + locals SelfSL] (M) weighted model averaging of sub-consensus models for (T) non-IID federated semi-supervised learning.
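The weighted averaging of sub-consensus models mentioned in the overview can be sketched as follows, assuming flat parameter vectors and externally supplied client weights. How the weights are computed is not covered in these notes, so `client_weights`, `num_subsets`, and `subset_size` are hypothetical inputs.

```python
import random


def weighted_average(models, weights):
    """Weighted average of flat parameter vectors."""
    total = sum(weights)
    dim = len(models[0])
    return [sum(w * m[i] for m, w in zip(models, weights)) / total for i in range(dim)]


def sub_consensus_aggregate(client_models, client_weights, num_subsets, subset_size, seed=0):
    """Sample client subsets, average each into a sub-consensus model, then average those."""
    rng = random.Random(seed)
    ids = list(range(len(client_models)))
    sub_models = []
    for _ in range(num_subsets):
        chosen = rng.sample(ids, subset_size)  # random subset of clients
        sub_models.append(
            weighted_average(
                [client_models[i] for i in chosen],
                [client_weights[i] for i in chosen],
            )
        )
    # The global model is the plain mean of the sub-consensus models.
    return weighted_average(sub_models, [1.0] * len(sub_models))


clients = [[0.0, 1.0], [2.0, 3.0], [4.0, 5.0], [6.0, 7.0]]
global_model = sub_consensus_aggregate(clients, [1, 1, 2, 2], num_subsets=3, subset_size=2)
```

Averaging over several random subsets, rather than over all clients at once, reduces the influence of any single deviating client on the global model.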


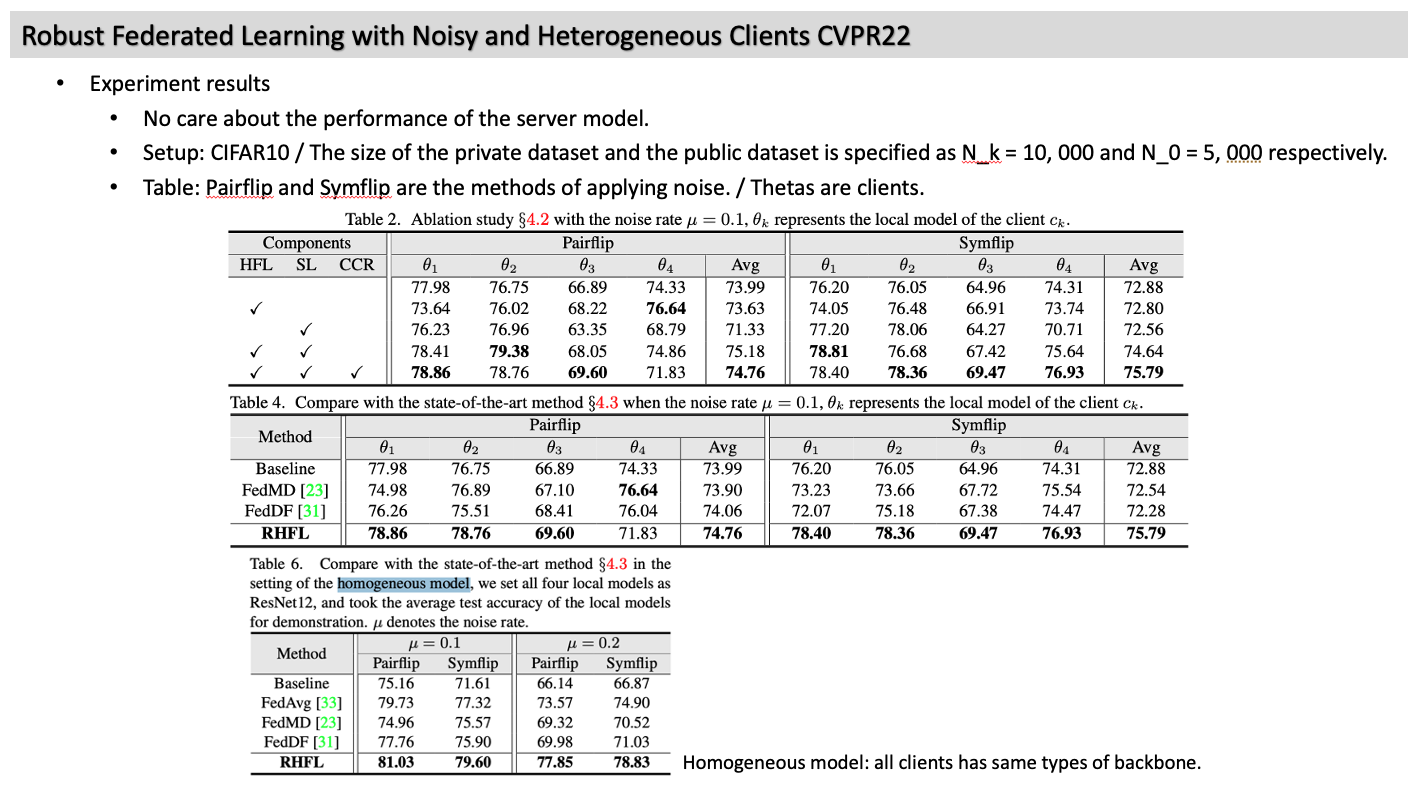

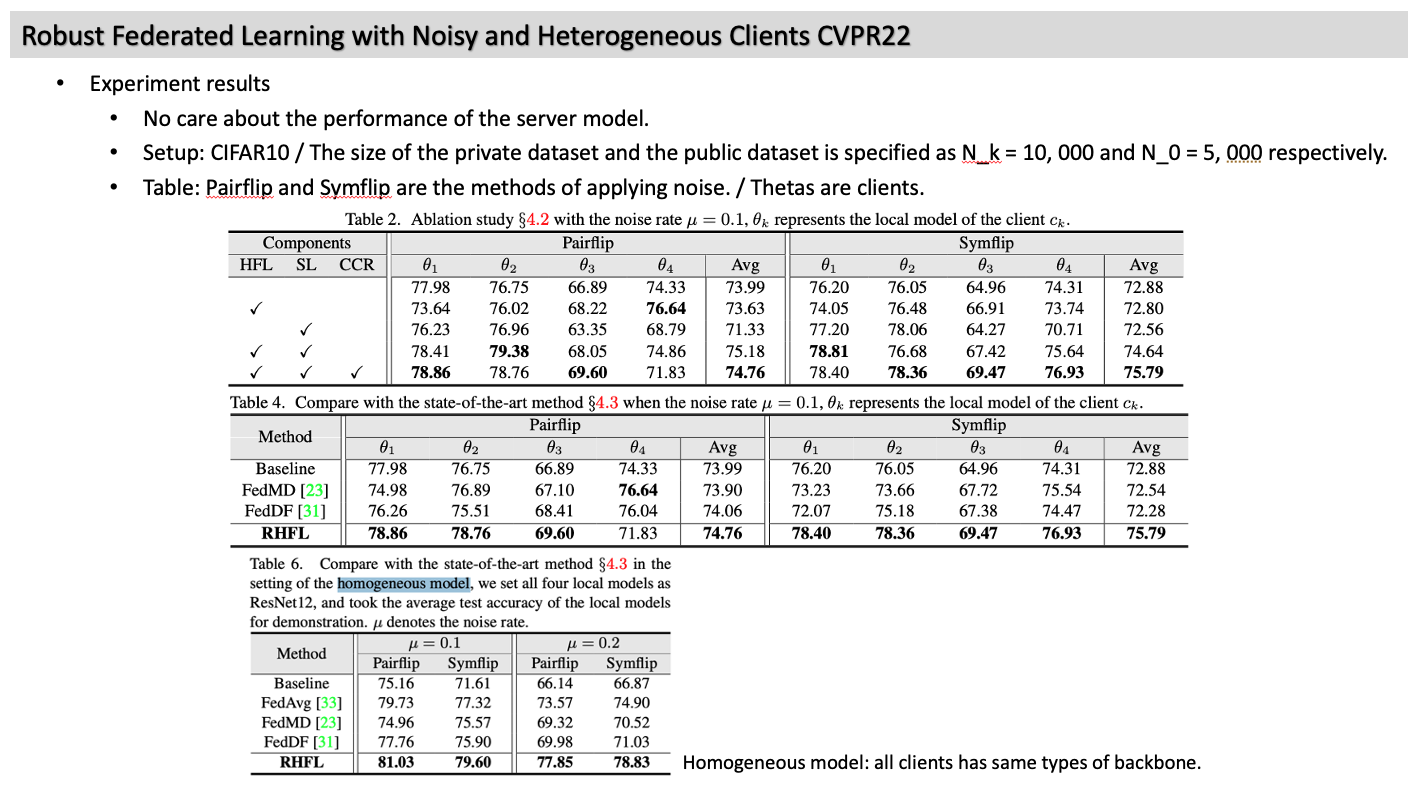

- Experiment results

- The performance of the server model is not considered.

- Setup: CIFAR10 / The sizes of the private and public datasets are N_k = 10,000 and N_0 = 5,000, respectively.

- Table: Pairflip and Symflip are the methods of applying noise. / Thetas are clients.
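The two noise types can be sketched under their usual definitions: pairflip moves a label to the next class, and symflip moves it to a uniformly random different class, each with probability `noise_rate`. The function names and the seeding are illustrative choices.

```python
import random


def pairflip(labels, num_classes, noise_rate, seed=0):
    """With probability noise_rate, flip each label to the next class (cyclically)."""
    rng = random.Random(seed)
    return [(y + 1) % num_classes if rng.random() < noise_rate else y for y in labels]


def symflip(labels, num_classes, noise_rate, seed=0):
    """With probability noise_rate, flip each label to a random different class."""
    rng = random.Random(seed)
    noisy = []
    for y in labels:
        if rng.random() < noise_rate:
            others = [c for c in range(num_classes) if c != y]
            noisy.append(rng.choice(others))
        else:
            noisy.append(y)
    return noisy


clean = [0, 1, 2, 3, 9]
pair_noisy = pairflip(clean, num_classes=10, noise_rate=0.2)
sym_noisy = symflip(clean, num_classes=10, noise_rate=0.2)
```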

4. FCCL: Learn From Others and Be Yourself in Heterogeneous Federated Learning CVPR22

- Overview: [local, locals SL] (T) model- and data-heterogeneous (domain shift between clients) federated learning that accounts for catastrophic forgetting in clients. (M) Self-supervised learning with Barlow Twins and continual learning by knowledge distillation.

- Introduction

- Model heterogeneity problem + data heterogeneity problem (= domain shift between clients) -> How can clients learn a generalizable representation? (intra)

- How to prevent catastrophic forgetting in clients? (inter = source knowledge)

- Method

- Federated Cross-correlation learning (FCCL)

- They encourage invariance in the same dimensions and diversity across different dimensions.
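A minimal sketch of a Barlow Twins style cross-correlation loss, which pushes the diagonal of the cross-correlation matrix toward 1 (invariance of the same dimensions) and the off-diagonal entries toward 0 (diversity of different dimensions). Which two sets of outputs are compared, and the off-diagonal weight `lam`, are assumptions here.

```python
import math


def standardize(batch):
    """Zero-mean, unit-variance normalization of each output dimension over the batch."""
    n, d = len(batch), len(batch[0])
    out = [[0.0] * d for _ in range(n)]
    for j in range(d):
        col = [row[j] for row in batch]
        mean = sum(col) / n
        std = math.sqrt(sum((v - mean) ** 2 for v in col) / n) or 1.0
        for i in range(n):
            out[i][j] = (batch[i][j] - mean) / std
    return out


def cross_correlation_loss(za, zb, lam=0.005):
    """Sum of (1 - C_ii)^2 on the diagonal plus lam * C_ij^2 off the diagonal."""
    a, b = standardize(za), standardize(zb)
    n, d = len(a), len(a[0])
    loss = 0.0
    for i in range(d):
        for j in range(d):
            c_ij = sum(a[k][i] * b[k][j] for k in range(n)) / n
            loss += (1 - c_ij) ** 2 if i == j else lam * c_ij ** 2
    return loss


# Two output batches (4 samples, 2 dimensions) that agree perfectly.
za = [[1.0, 1.0], [1.0, -1.0], [-1.0, 1.0], [-1.0, -1.0]]
loss_same = cross_correlation_loss(za, za)
```

When the two batches agree and their dimensions are uncorrelated, the cross-correlation matrix is the identity and the loss vanishes.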

- Federated continual learning (FCL)

- It involves the knowledge learned from other participants through the collaborative learning of FCCL.
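Continual learning by knowledge distillation can be sketched as a local loss that adds a KD term toward a teacher model to the usual cross-entropy. This is a generic illustration: which model acts as the teacher, the weight `alpha`, and the `temperature` are assumptions not taken from these notes.

```python
import math


def softmax(logits, temperature=1.0):
    """Numerically stable softmax with an optional temperature."""
    scaled = [z / temperature for z in logits]
    m = max(scaled)
    exps = [math.exp(z - m) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def kd_regularized_loss(student_logits, teacher_logits, label, alpha=0.5, temperature=1.0):
    """Cross-entropy on the local label plus alpha * KL(teacher || student)."""
    ce = -math.log(softmax(student_logits)[label])
    t = softmax(teacher_logits, temperature)
    s = softmax(student_logits, temperature)
    kd = sum(ti * math.log(ti / si) for ti, si in zip(t, s))
    return ce + alpha * kd


student = [2.0, 0.5, -1.0]
teacher = [0.0, 2.0, 0.0]  # hypothetical logits from the model kept as teacher
loss = kd_regularized_loss(student, teacher, label=0)
```

The KD term penalizes the local model for drifting away from the teacher's predictions during local training, which is the mechanism that counters forgetting.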