[FL] Federated learning papers 2 (+Domain Adaptation)


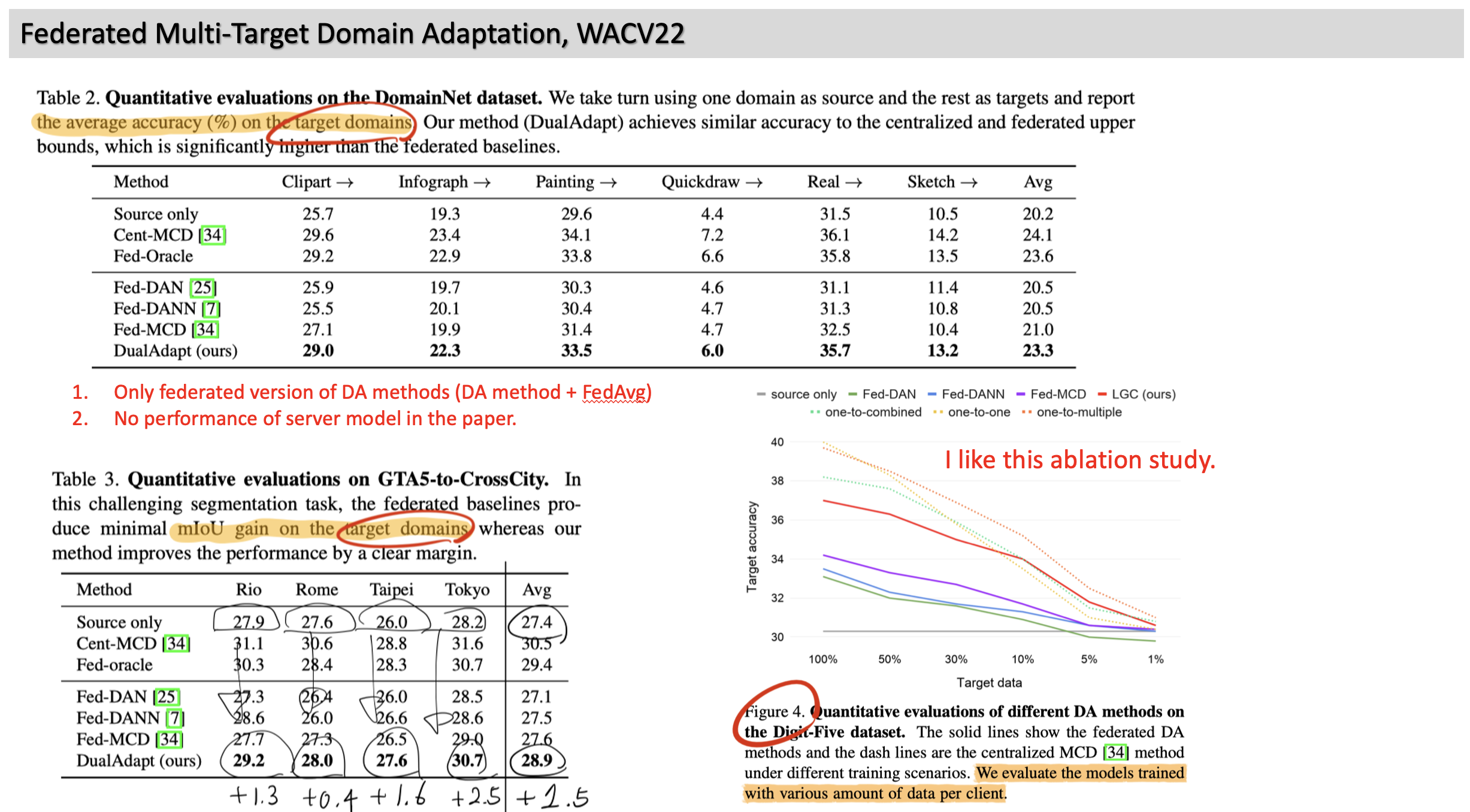

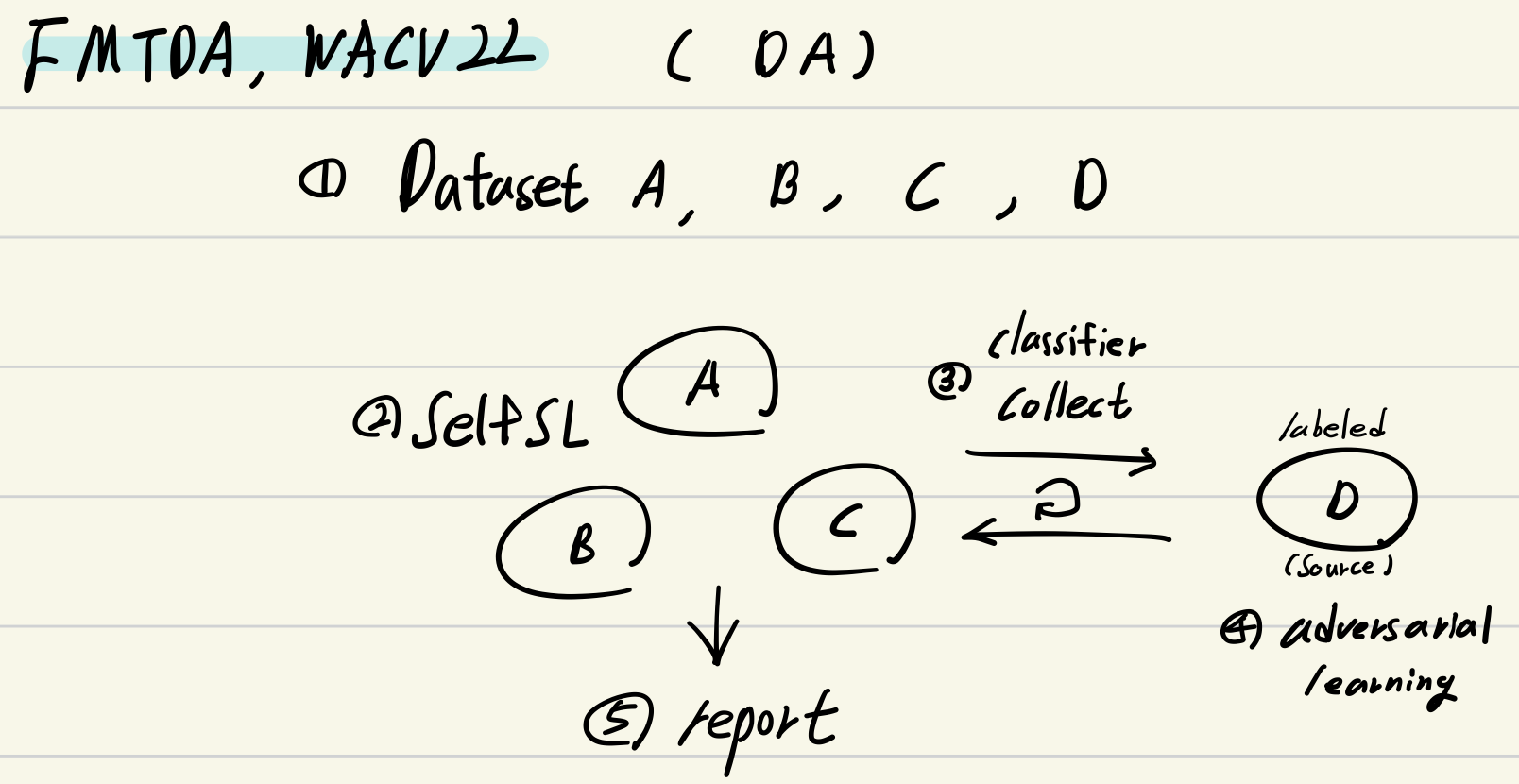

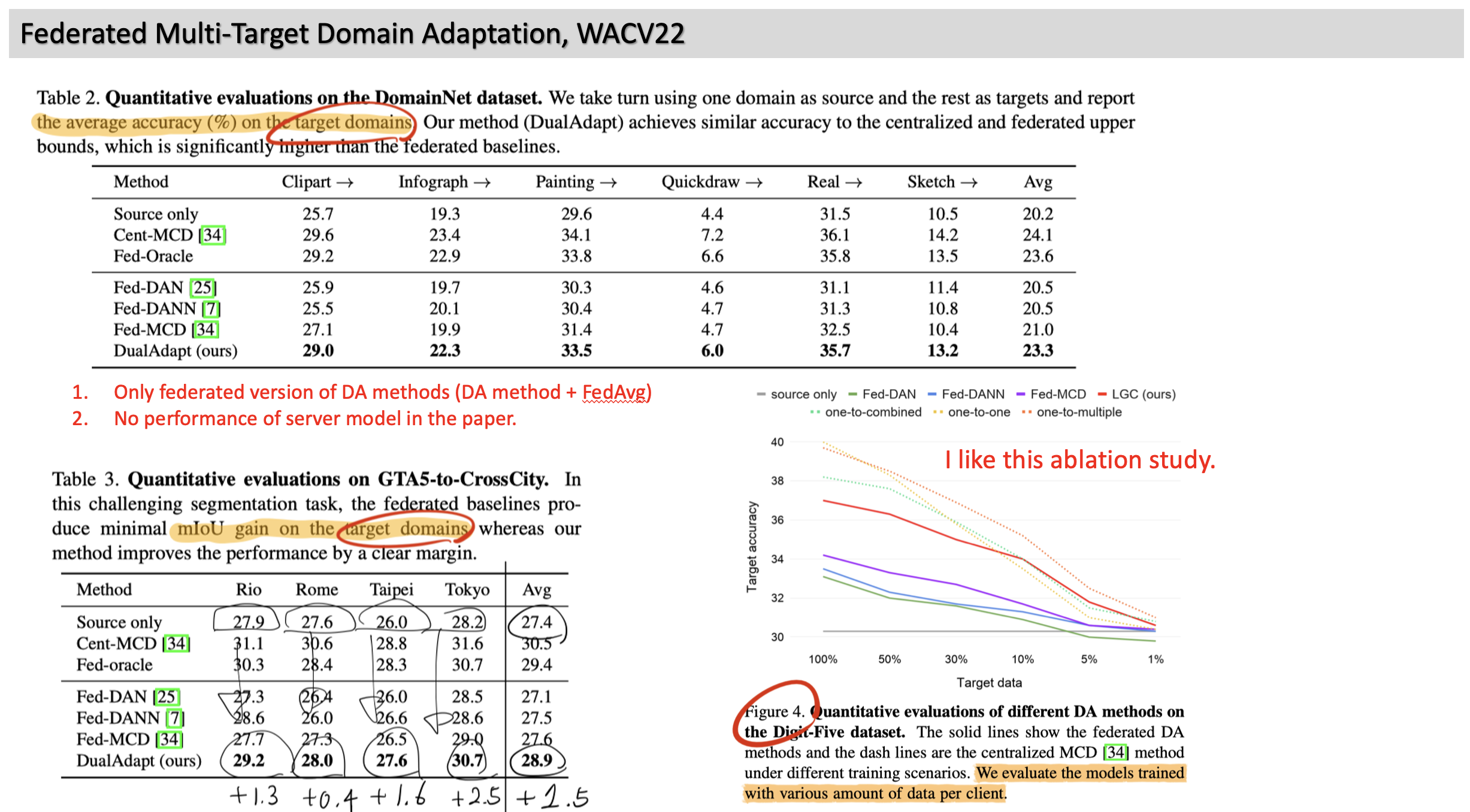

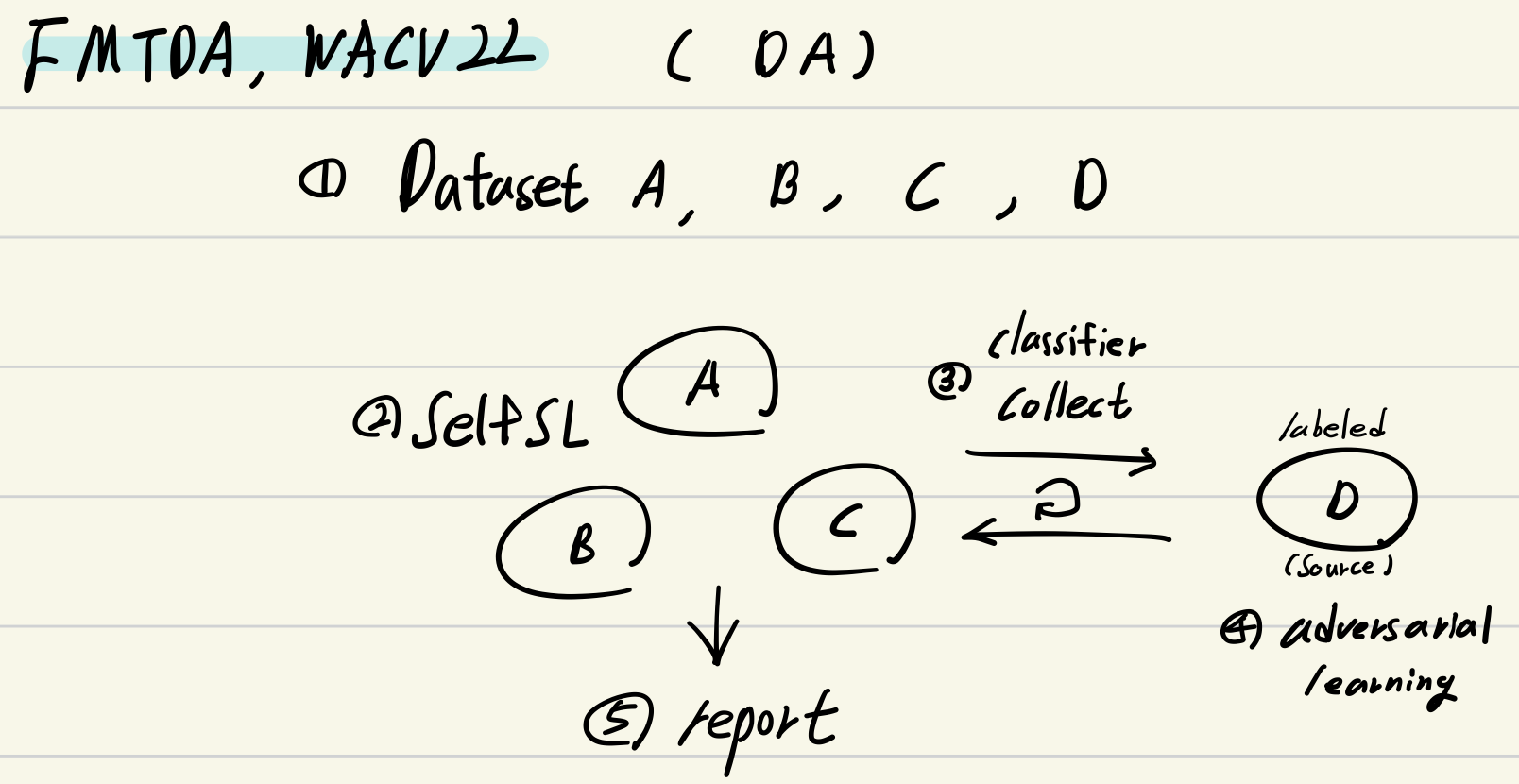

1. Federated Multi-Target Domain Adaptation, WACV22

- Overview: [Local, locals SelfSL] (T) Unlabeled client datasets; labeled public data is available only on the server. (M) Pseudo-labeling and maximum classifier discrepancy (MCD).

- Introduction

- New task: FMTDA (Multi-target = data heterogeneity): All client data is unlabeled, and a centralized labeled dataset is only available on the server

- Challenges of FL + DA

- Unlabeled dataset in clients

- Domain shift between clients

- Communication overhead

- Method

- Adversarial learning using MCD; see steps (2) and (5) in the figure.

- Upload only the classifier parameters.

- Reference & Details: https://junha1125.github.io/blog/artificial-intelligence/2021-10-25-DGpapers6/#67-federated-multi-target-domain-adaptation
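The two ingredients named in the overview (MCD and pseudo-labels) can be sketched in a few lines. This is a minimal illustration, not the paper's implementation: the discrepancy is the usual mean absolute difference between the softmax outputs of two classifier heads, while `pseudo_labels` and its `threshold` are hypothetical helpers introduced here to show confidence-based pseudo-labeling.

```python
import numpy as np

def softmax(logits):
    # Numerically stable softmax over the last axis.
    z = logits - logits.max(axis=-1, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=-1, keepdims=True)

def classifier_discrepancy(logits_a, logits_b):
    # Mean absolute difference between the two heads' class probabilities.
    # The classifiers are trained to maximize it; the feature extractor to minimize it.
    return float(np.mean(np.abs(softmax(logits_a) - softmax(logits_b))))

def pseudo_labels(logits_a, logits_b, threshold=0.9):
    # Assumption (not from the paper): average both heads and keep only
    # samples whose top probability reaches `threshold`.
    probs = 0.5 * (softmax(logits_a) + softmax(logits_b))
    labels = probs.argmax(axis=-1)
    mask = probs.max(axis=-1) >= threshold
    return labels, mask

# Two heads that fully disagree on a single two-class sample.
a = np.array([[100.0, 0.0]])
b = np.array([[0.0, 100.0]])
disagreement = classifier_discrepancy(a, b)
```

Unlabeled client samples on which the two heads disagree are the ones the adversarial step focuses on; samples with confident, consistent predictions are candidates for pseudo-labels.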

2. Client-agnostic Learning and Zero-shot Adaptation for Federated Domain Generalization, submitted to ICLR23

- Overview: [Global, locals SL] (T) Training local models with DG algorithms. (M) Mix-style interpolation of global and local statistics, plus alpha-BN during testing.

- Link (https://openreview.net/forum?id=S4PGxCIbznF)

- Introduction

- Federated domain generalization aims to generalize the model to new clients.

- (1) FedAvg → (2) FL with non-IID data (= heterogeneous label distribution) → (3) domain shift between local clients

- Previous works on (3) train local clients without DG algorithms.

- Method

- Client-agnostic learning with mixed statistics.

- Randomly interpolate instance and global statistics in BN (only BN is updated).

- Zero-shot adapter

- alpha-BN, with alpha produced by a generator called the zero-shot adapter.
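The statistics mixing described in the Method above can be sketched as follows. This is a rough illustration under assumptions: the notes do not give the paper's exact formulation, so the linear interpolation of mean and variance, the function names, and the fixed `alpha` (standing in for the zero-shot adapter's output) are all hypothetical.

```python
import numpy as np

def instance_stats(x):
    # x has shape (N, C, H, W); statistics are per sample and per channel.
    mean = x.mean(axis=(2, 3), keepdims=True)
    var = x.var(axis=(2, 3), keepdims=True)
    return mean, var

def mixed_norm(x, global_mean, global_var, lam, eps=1e-5):
    # lam = 1 uses instance statistics only; lam = 0 uses global statistics only.
    inst_mean, inst_var = instance_stats(x)
    g_mean = global_mean.reshape(1, -1, 1, 1)
    g_var = global_var.reshape(1, -1, 1, 1)
    mean = lam * inst_mean + (1.0 - lam) * g_mean
    var = lam * inst_var + (1.0 - lam) * g_var
    return (x - mean) / np.sqrt(var + eps)

rng = np.random.default_rng(0)
x = rng.normal(loc=2.0, scale=3.0, size=(4, 8, 16, 16))
global_mean = np.zeros(8)  # placeholder running statistics
global_var = np.ones(8)

# Training: a random interpolation weight makes the features client-agnostic.
lam = rng.uniform(0.0, 1.0)
train_out = mixed_norm(x, global_mean, global_var, lam)

# Testing: alpha would come from the zero-shot adapter; 0.3 is a placeholder.
alpha = 0.3
test_out = mixed_norm(x, global_mean, global_var, alpha)
```

The same normalization function serves both phases; only the source of the interpolation weight changes (random during training, predicted at test time).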

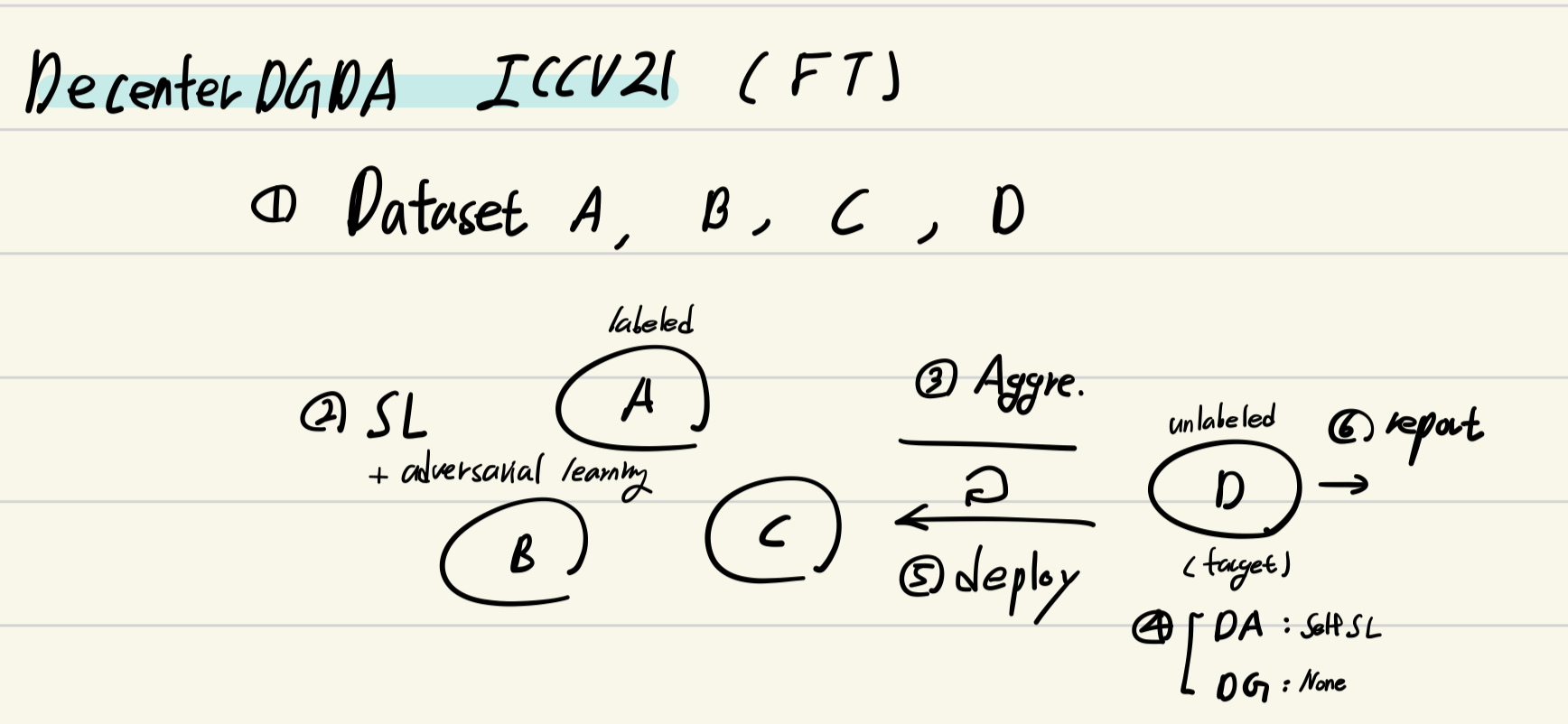

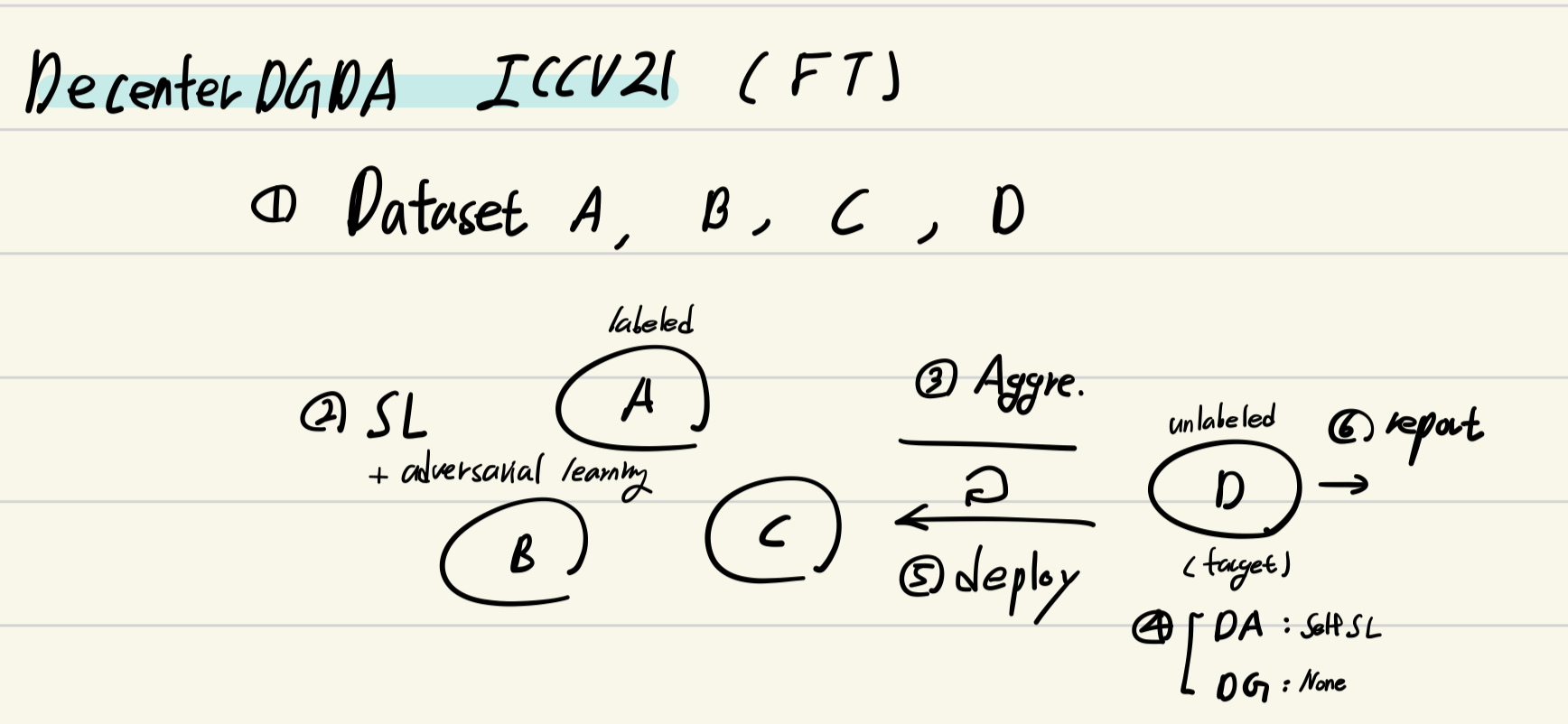

3. Collaborative Optimization and Aggregation for Decentralized Domain Generalization and Adaptation, ICCV21

- Overview: [Global, locals SL + global SelfSL] (T) Decentralized DG and UDA (M) Collaborative learning with local classifiers and agreement-based weighted aggregation

- Introduction

- Centralized DG and UDA: (Issue 1) data privacy. (Issue 2) A single shared model, rather than "local models and a global model."

- Diff from FedAvg: (1) Selective central aggregation (2) Multiple source datasets with domain shift.

- Proposed Task: we study the problems of decentralized DG and UDA, which aim at optimizing a generalized target model via decentralized learning with non-shared data from multiple source domains.

- Method

- Local Model Collaborative Optimization

- (1) CE loss + (2) hybrid IN & BN (resolves domain shift) + (3) collaboration of frozen classifiers (generalizes the backbone)

- Global Model Optimization and Aggregation

- (1) Parameter aggregation based on multi-agreement (constructs the global model and further protects against attacks) + (2) the same losses as above

- Concerns

- Little justification for why source domains (clients) would participate in federated learning.

- Labeled clients (source) / Unlabeled server (target) -> Awkward

- How would it handle an unbounded number of clients?

- Updating the local model with the global model can negatively impact performance in local domains.

- Experiment results ->> Domain generalization (the model is initialized from an ImageNet pre-trained model) := fine-tuning task
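To make the agreement-based weighted aggregation from the Method above concrete, here is a small sketch. The scoring rule is an assumption made for illustration and is not taken from the paper: each local model is scored by how often its prediction on unlabeled data matches the majority vote of all local models, and the normalized scores weight the parameter average. `agreement_weights` and `aggregate` are hypothetical names.

```python
import numpy as np

def agreement_weights(pred_labels, num_classes):
    # pred_labels: (K, N) integer predictions of K local models on N samples.
    k_models, n_samples = pred_labels.shape
    votes = np.zeros((n_samples, num_classes), dtype=int)
    for k in range(k_models):
        votes[np.arange(n_samples), pred_labels[k]] += 1
    majority = votes.argmax(axis=1)
    # Fraction of samples on which each model agrees with the majority vote.
    scores = (pred_labels == majority[None, :]).mean(axis=1)
    return scores / scores.sum()

def aggregate(params_list, weights):
    # params_list: one dict (name -> ndarray) per local model.
    return {
        name: sum(w * p[name] for w, p in zip(weights, params_list))
        for name in params_list[0]
    }

preds = np.array([[0, 1, 1],
                  [0, 1, 0],
                  [1, 0, 0]])
weights = agreement_weights(preds, num_classes=2)
local_params = [{"w": np.array([1.0])}, {"w": np.array([2.0])}, {"w": np.array([4.0])}]
global_params = aggregate(local_params, weights)
```

Down-weighting a model that disagrees with the others is also what gives this style of aggregation some robustness against a corrupted client, which matches the "protect against attacks" remark in the notes.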

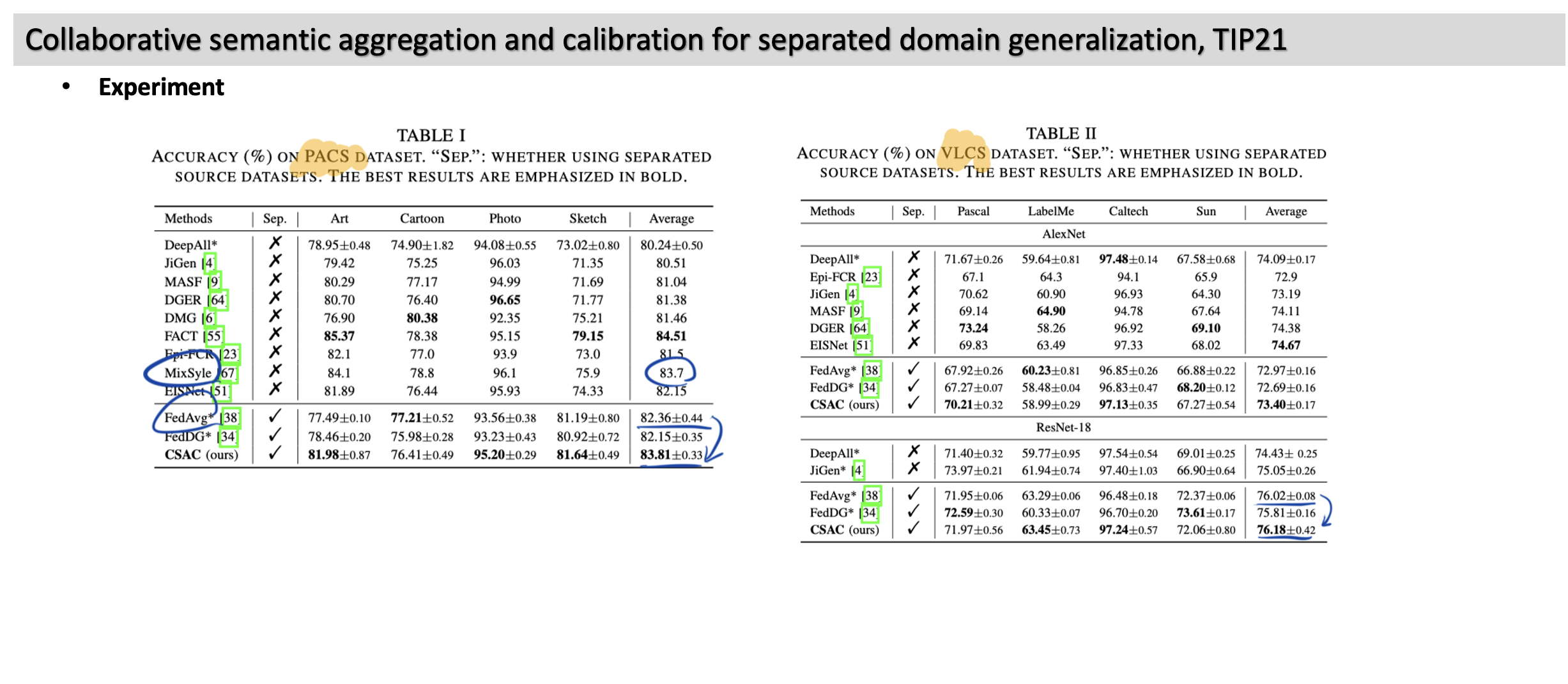

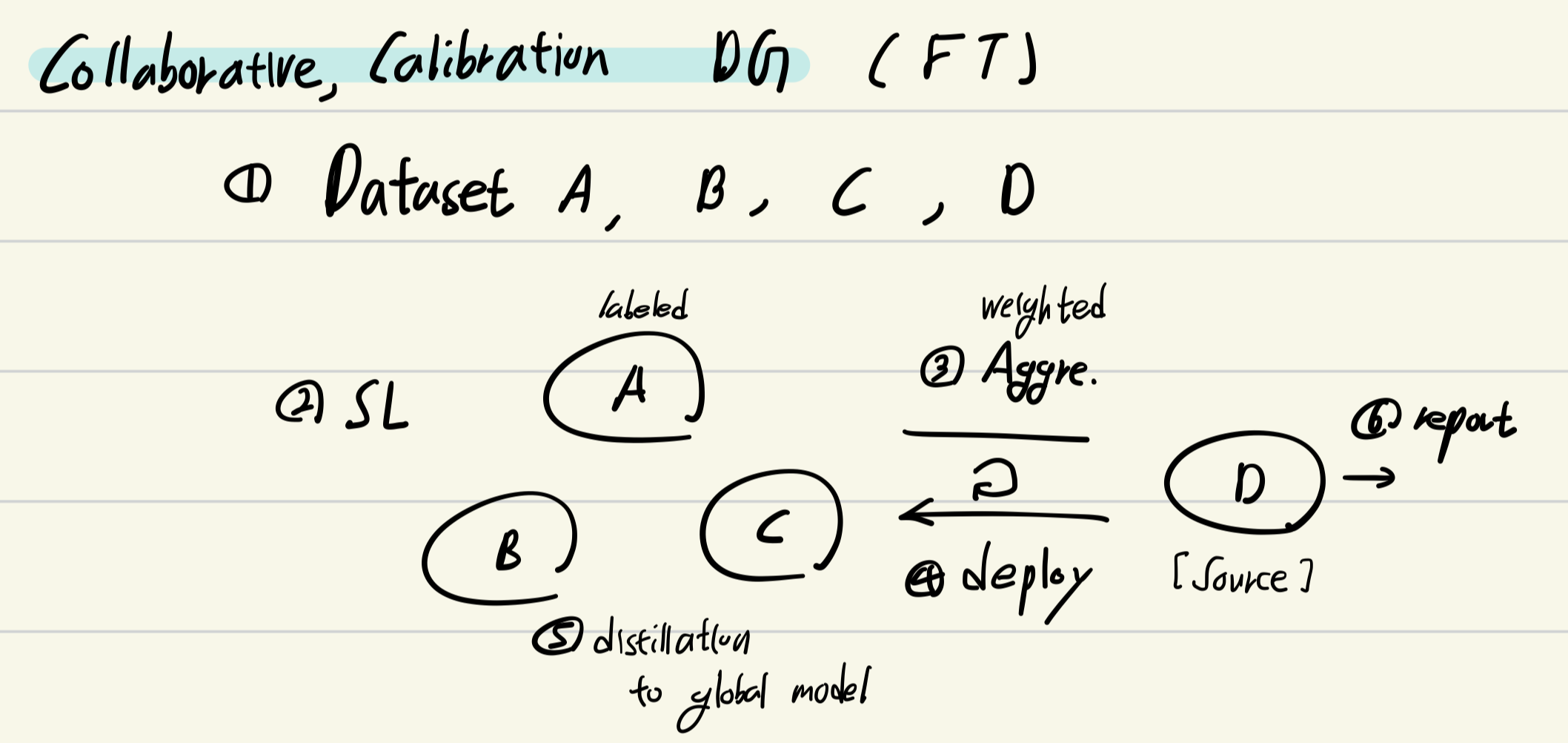

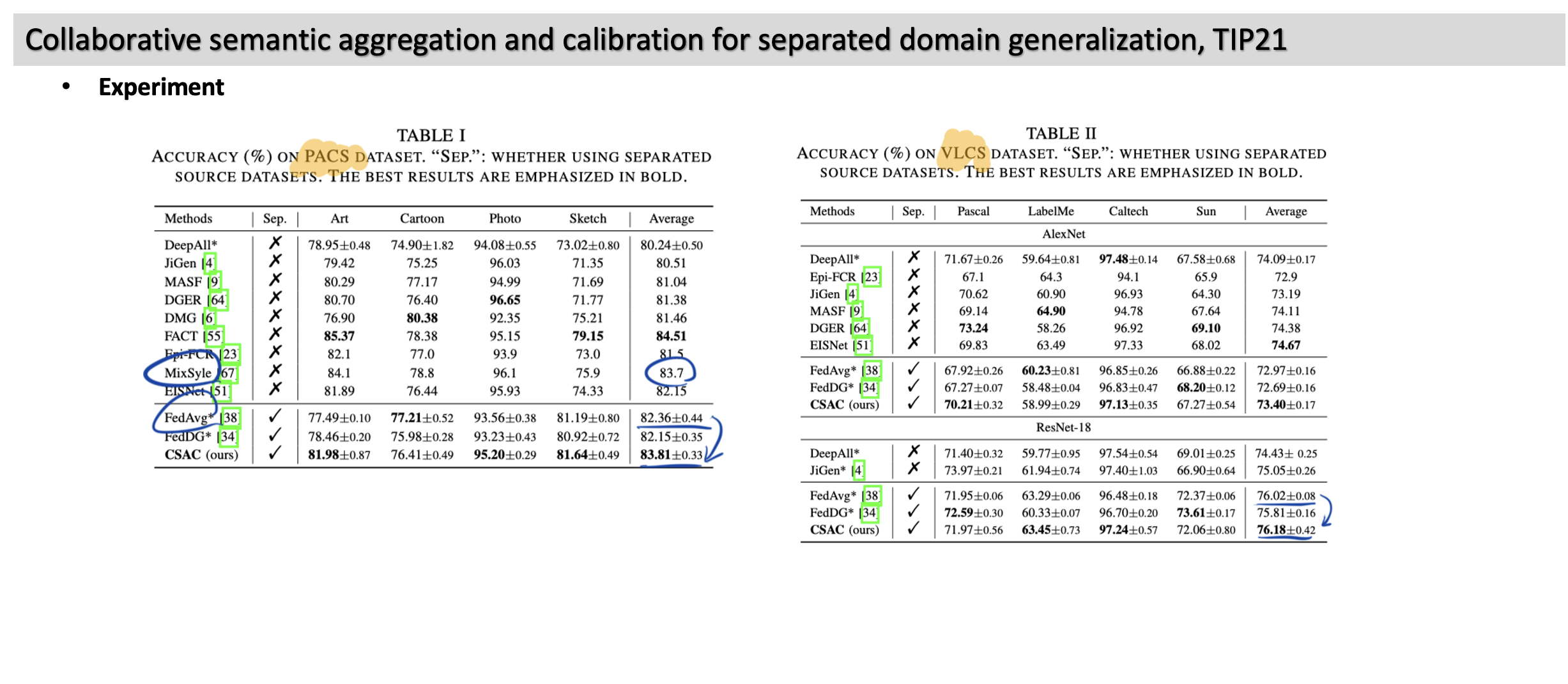

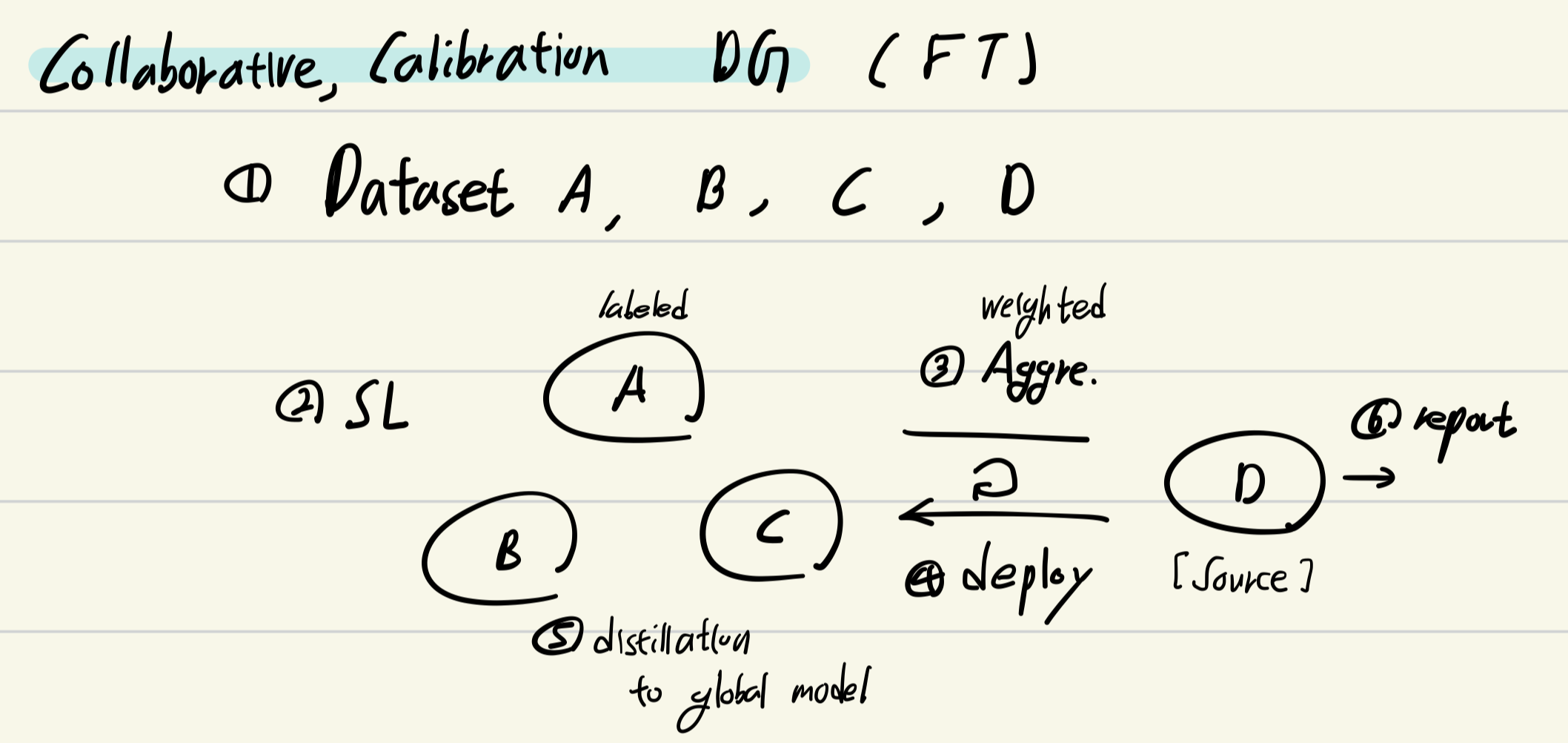

4. Collaborative semantic aggregation and calibration for separated domain generalization, TIP21

- Overview: [Global, locals SL] (T) federated DG (M) layer-by-layer weighted aggregation and layer-wise weighted distillation

- Introduction

- Tremendous data is stored locally in distributed places nowadays, which may contain private information, e.g., patient data from hospitals and video recordings from surveillance cameras.

- Method

- [Build fused model] Weighted aggregation layer-by-layer

- [Train fused model] Layer-wise feature alignment (weighted distillation)
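The two steps above can be sketched as follows. The notes do not say how the per-layer weights are computed, so they are taken as given inputs here; the mean-squared-error alignment term and the function names are likewise assumptions for illustration, not the paper's definitions.

```python
import numpy as np

def fuse_layerwise(local_params, layer_weights):
    # local_params: one dict (layer name -> ndarray) per local model.
    # layer_weights: layer name -> K non-negative weights, one per local model.
    fused = {}
    for name in local_params[0]:
        w = np.asarray(layer_weights[name], dtype=float)
        w = w / w.sum()  # normalize per layer
        stacked = np.stack([p[name] for p in local_params])  # (K, ...)
        fused[name] = np.tensordot(w, stacked, axes=1)
    return fused

def weighted_alignment_loss(fused_feat, local_feats, weights):
    # Weighted sum of MSE terms between the fused model's features and each
    # local model's features at one layer (assumed form of the distillation loss).
    w = np.asarray(weights, dtype=float)
    w = w / w.sum()
    return float(sum(wk * np.mean((fused_feat - f) ** 2)
                     for wk, f in zip(w, local_feats)))

local_params = [
    {"conv": np.array([1.0, 1.0]), "fc": np.array([0.0])},
    {"conv": np.array([3.0, 3.0]), "fc": np.array([2.0])},
]
layer_weights = {"conv": [1.0, 3.0], "fc": [1.0, 1.0]}
fused = fuse_layerwise(local_params, layer_weights)
```

Because every layer has its own weight vector, one local model can dominate the early layers while another dominates the classifier, which is the main difference from a single FedAvg-style weight per client.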

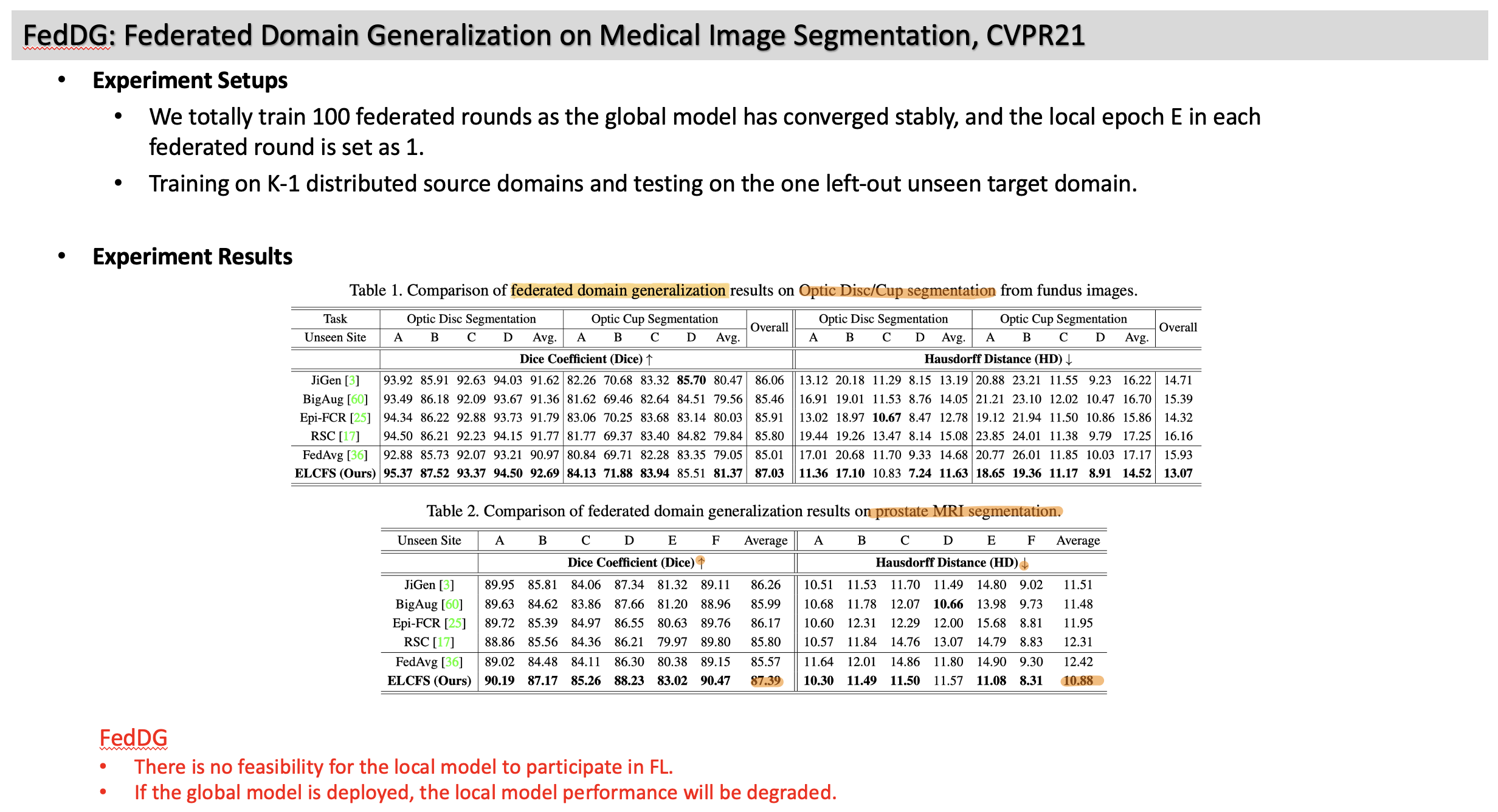

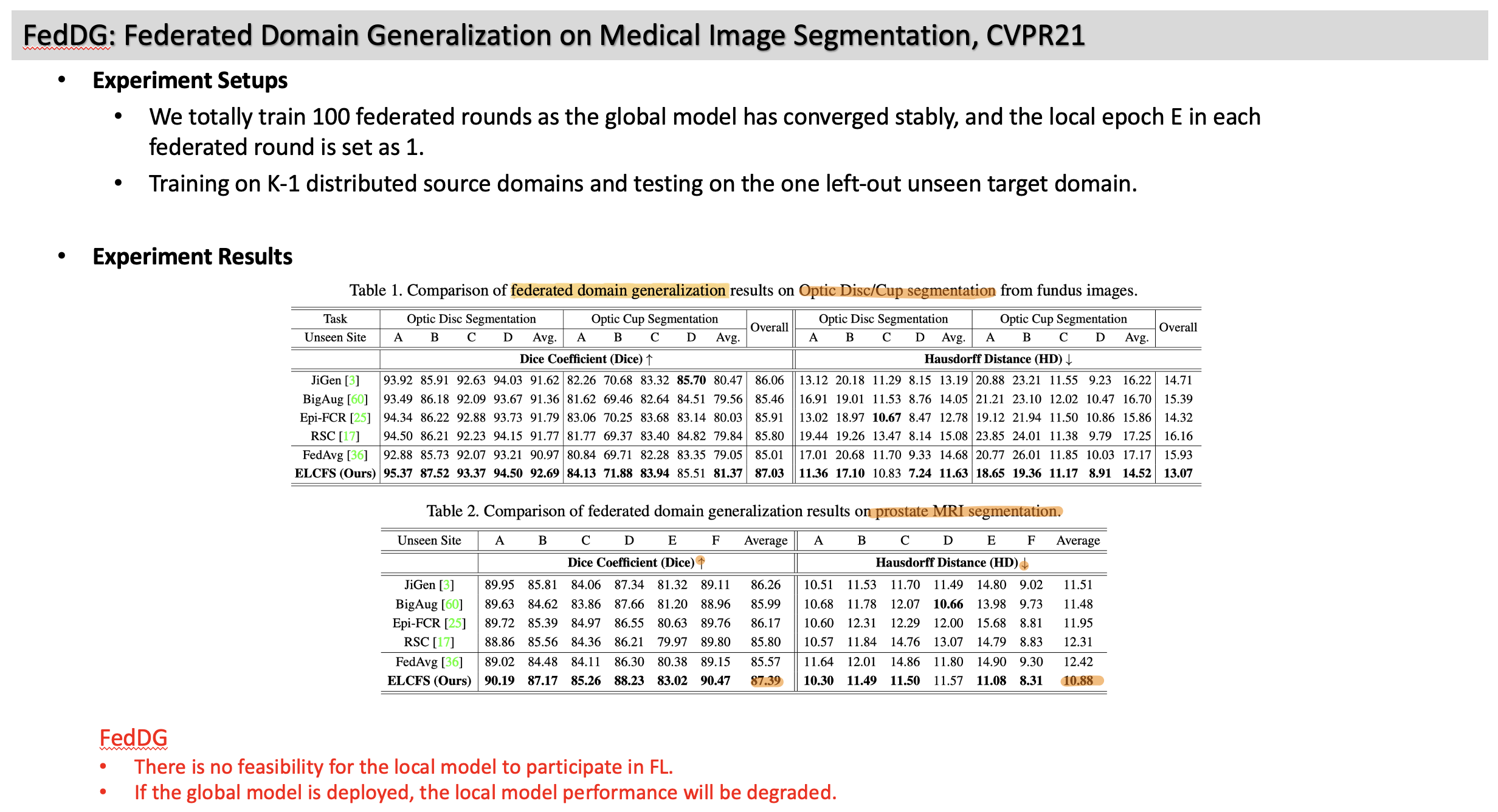

5. FedDG: Federated Domain Generalization on Medical Image Segmentation, CVPR21

- Overview: [Global, locals SL] (T) federated DG (M) style interpolation using the amplitude spectrum from the Fourier transform, and pixel-wise contrastive learning for segmentation

- Introduction

- All existing FL works only focus on improving model performance on the internal clients while neglecting model generalizability onto unseen domains outside the federation.

- The learning at each client can only access its local data. Therefore, current DG methods are typically not applicable in the FedDG scenario.

- Method

- Training local clients with DG algorithms

- Image Fourier transform → amplitude (style) and phase spectrum (semantic info) → interpolate the amplitude spectrum to generate images with unseen styles.

- (meta-learning-based) boundary-oriented contrastive learning
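The amplitude interpolation step above can be illustrated with NumPy's FFT. This is a minimal sketch, assuming single-channel images and interpolation over the full spectrum; the notes do not say whether only part of the spectrum is mixed, and the function names and the weight `lam` are hypothetical. Only the amplitude spectrum of the other image is needed, not the image itself.

```python
import numpy as np

def amplitude_phase(img):
    # 2D FFT over the spatial axes; amplitude carries style, phase carries semantics.
    spec = np.fft.fft2(img, axes=(0, 1))
    return np.abs(spec), np.angle(spec)

def interpolate_style(img, other_amplitude, lam):
    # Keep the local phase, blend the local amplitude with another amplitude.
    amp, phase = amplitude_phase(img)
    mixed_amp = (1.0 - lam) * amp + lam * other_amplitude
    mixed_spec = mixed_amp * np.exp(1j * phase)
    # The imaginary part is numerical noise for real-valued inputs.
    return np.real(np.fft.ifft2(mixed_spec, axes=(0, 1)))

rng = np.random.default_rng(0)
local_img = rng.random((8, 8))
other_img = rng.random((8, 8))
other_amp, _ = amplitude_phase(other_img)

lam = rng.uniform(0.0, 1.0)  # random interpolation ratio per sample
styled = interpolate_style(local_img, other_amp, lam)
```

With `lam = 0` the function returns the original image, and with `lam = 1` the output has the other image's amplitude spectrum combined with the local phase.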