[Effi.] Efficient vision transformer 1

Efficient_ViT1

1. ToMe: Token Merging Your ViT But Faster_ICLR23

- Method

- Our method works with or without training.

- By merging redundant tokens, we hope to increase throughput.

- We merge tokens to reduce by r per layer. (100 > 95 > 90 > 85 …). ToMo merges with r = 8 which gradually removes 98% of tokens over the 24 layers of the network. Moreover, we also define a “decreasing” schedule which is faster because more are removed early.

- We apply our token merging step between the attention and MLP.

- We use a dot product similarity metric (e.g., cosine similarity).

- We propose a more efficient solution, so we focus on matching and not clustering.

- Proportional Attention: Equ. (1). This performs the same operation as if you’d have s copies of the key.

- Results

- On average, they achieve 2× the throughput with competitive performance, compared with SOTA models.

- Tons of ablation studies and experiment results prove the effectiveness of their approach.

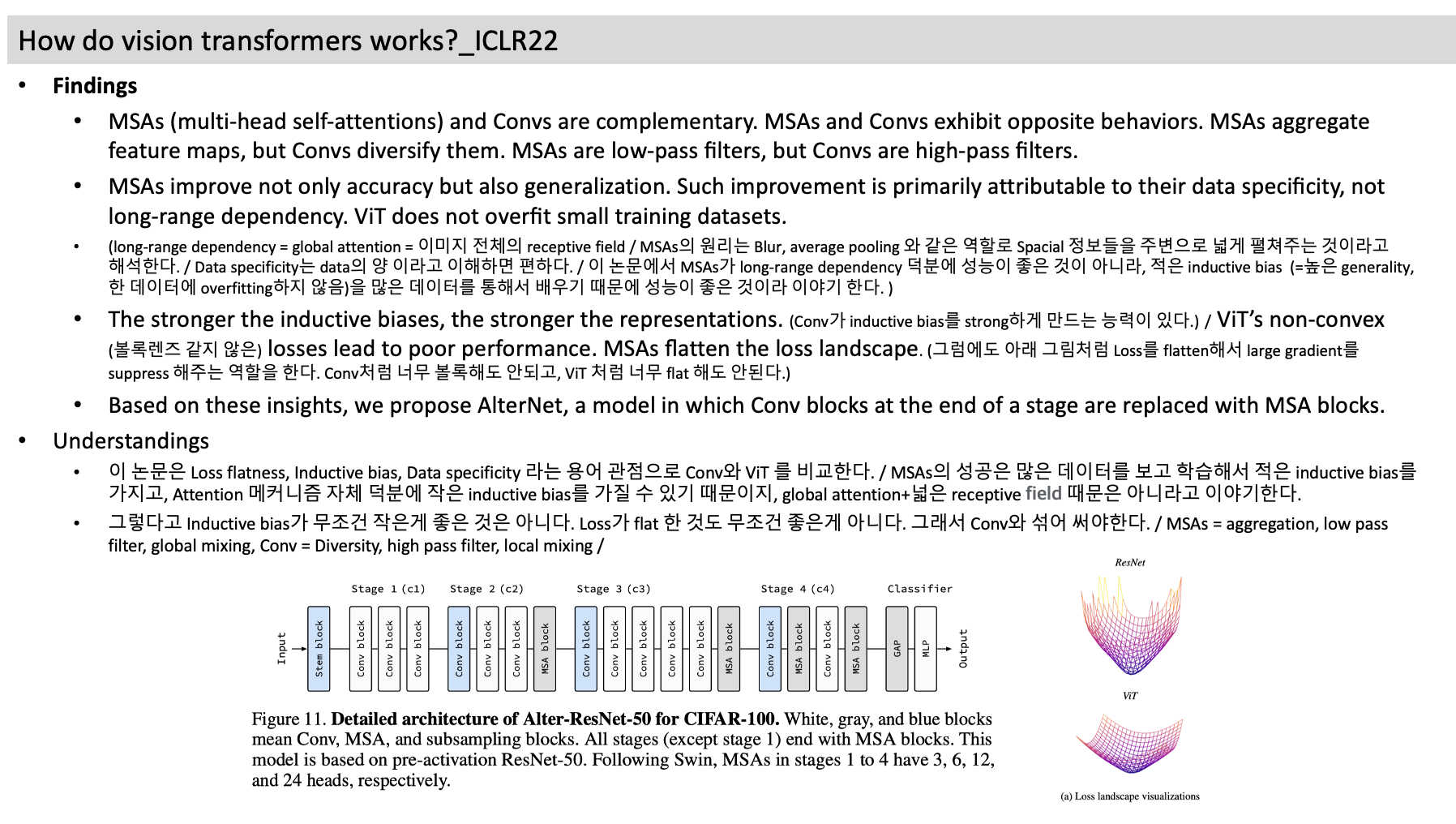

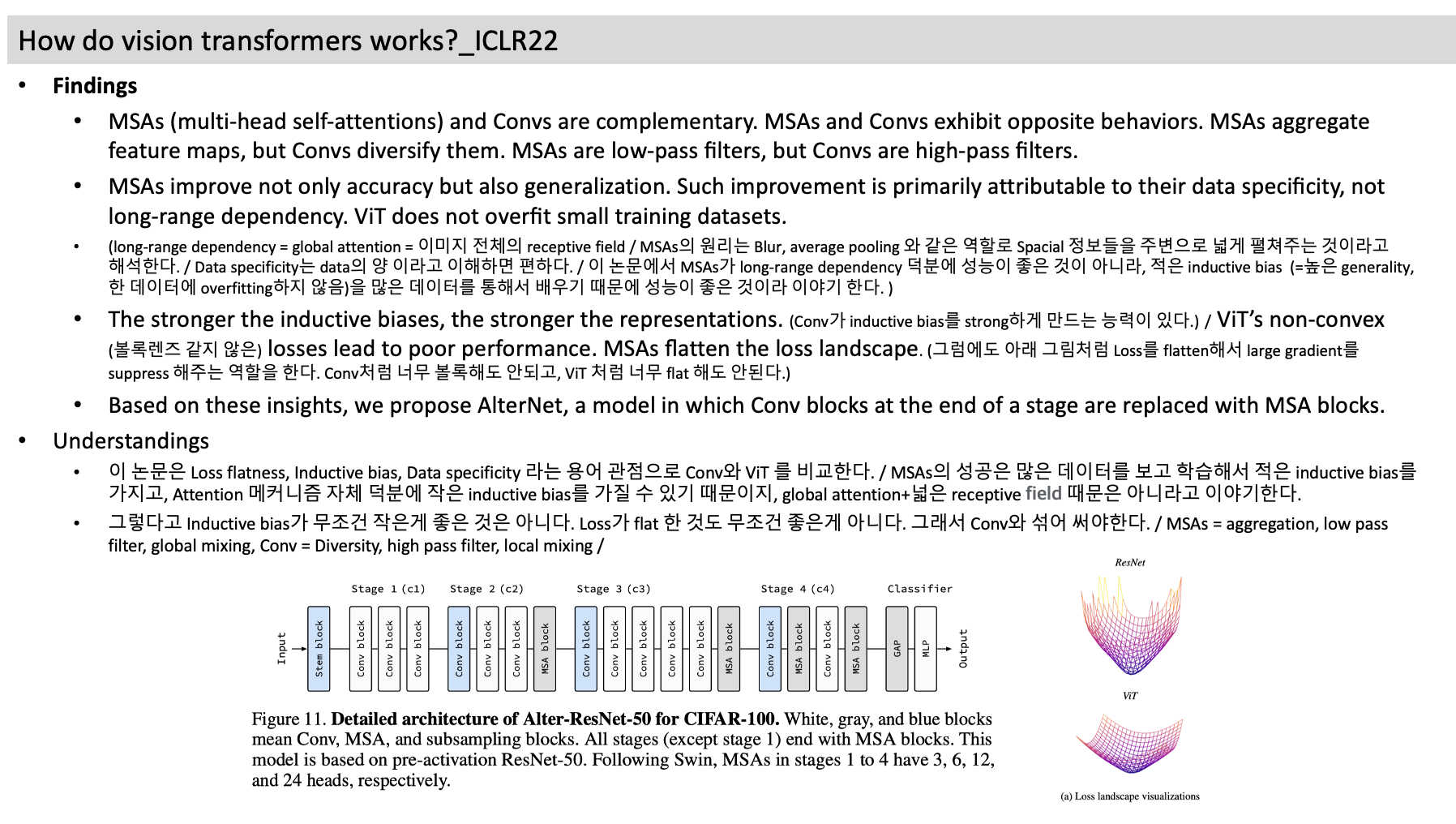

- Findings

- MSAs (multi-head self-attentions) and Convs are complementary. MSAs and Convs exhibit opposite behaviors. MSAs aggregate feature maps, but Convs diversify them. MSAs are low-pass filters, but Convs are high-pass filters.

- MSAs improve not only accuracy but also generalization. Such improvement is primarily attributable to their data specificity, not long-range dependency. ViT does not overfit small training datasets.

- (long-range dependency = global attention = 이미지 전체의 receptive field / MSAs의 원리는 Blur, average pooling 와 같은 역할로 Spacial 정보들을 주변으로 넓게 펼쳐주는 것이라고 해석한다. / Data specificity는 data의 양 이라고 이해하면 편하다. / 이 논문에서 MSAs가 long-range dependency 덕분에 성능이 좋은 것이 아니라, 적은 inductive bias (=높은 generality, 한 데이터에 overfitting하지 않음)을 많은 데이터를 통해서 배우기 때문에 성능이 좋은 것이라 이야기 한다. )

- The stronger the inductive biases, the stronger the representations. (Conv가 inductive bias를 strong하게 만드는 능력이 있다.) / ViT’s non-convex (볼록렌즈 같지 않은) losses lead to poor performance. MSAs flatten the loss landscape. (그럼에도 아래 그림처럼 Loss를 flatten해서 large gradient를 suppress 해주는 역할을 한다. Conv처럼 너무 볼록해도 안되고, ViT 처럼 너무 flat 해도 안된다.)

- Based on these insights, we propose AlterNet, a model in which Conv blocks at the end of a stage are replaced with MSA blocks.

- Understandings

- 이 논문은 Loss flatness, Inductive bias, Data specificity 라는 용어 관점으로 Conv와 ViT 를 비교한다. / MSAs의 성공은 많은 데이터를 보고 학습해서 적은 inductive bias를 가지고, Attention 메커니즘 자체 덕분에 작은 inductive bias를 가질 수 있기 때문이지, global attention+넓은 receptive field 때문은 아니라고 이야기한다.

- 그렇다고 Inductive bias가 무조건 작은게 좋은 것은 아니다. Loss가 flat 한 것도 무조건 좋은게 아니다. 그래서 Conv와 섞어 써야한다. / MSAs = aggregation, low pass filter, global mixing, Conv = Diversity, high pass filter, local mixing

3. Patches Are All You Need?_TMLR23

- Summary

- Key of ViT’s success = Patchfy + (Channel & Spatial wise mixing) x alternating steps => ConvMixer

- Patches are sufficient for designing simple and effective vision models. (여기서 말하는 patch는 conv를 통과시켜 나온 h x n/p x n/p feature 의 dimension (h) and resolution (n/p) 를 계속 유지하는 것을 말한다.)

- Introduction

- Many works replace self-attention with novel operations and making other small changes. The common template of them is patch (maintaining equal size and resolution),isotropic (global attention), and alternating steps of spatial and channel mixing.

- Finding: The strong performance may result more from this patch-based representation, than using self-attention and MLPs.

- Methods

- Our architecture is based on the idea of mixing. We chose depthwise convolution to mix spatial locations and pointwise convolution to mix channel locations.

- While self-attention (spatial mixing) and MLPs (depth mixing) are theoretically more flexible, allowing for large receptive fields and content-aware behavior, the inductive bias of convolution is well-suited to vision tasks and leads to high data efficiency.

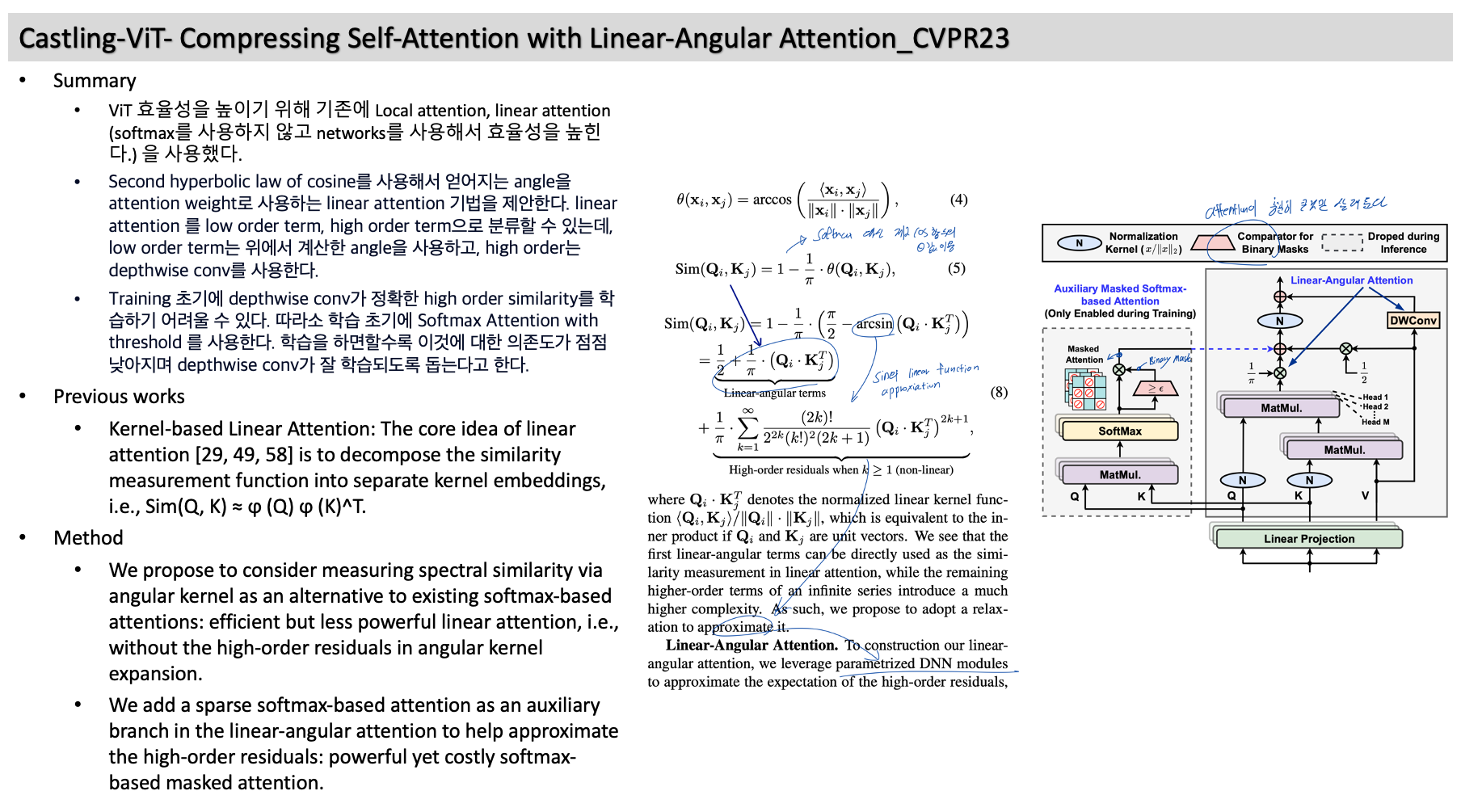

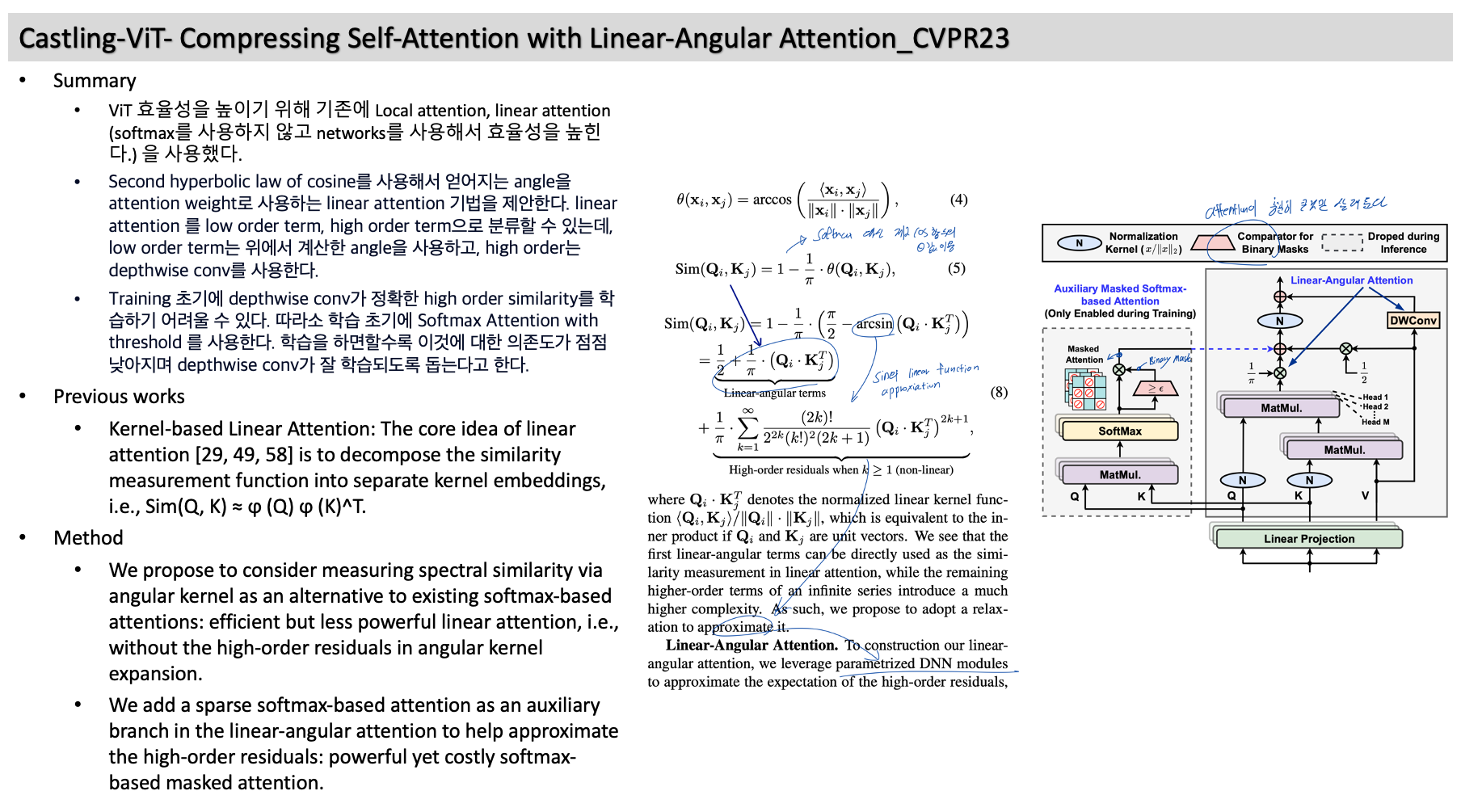

4. Castling-ViT- Compressing Self-Attention with Linear-Angular Attention_CVPR23

- Summary

- ViT 효율성을 높이기 위해 기존에 Local attention, linear attention (softmax를 사용하지 않고 networks를 사용해서 효율성을 높힌다.) 을 사용했다.

- Second hyperbolic law of cosine를 사용해서 얻어지는 angle을 attention weight로 사용하는 linear attention 기법을 제안한다. linear attention 를 low order term, high order term으로 분류할 수 있는데, low order term는 위에서 계산한 angle을 사용하고, high order는 depthwise conv를 사용한다.

- Training 초기에 depthwise conv가 정확한 high order similarity를 학습하기 어려울 수 있다. 따라소 학습 초기에 Softmax Attention with threshold 를 사용한다. 학습을 하면할수록 이것에 대한 의존도가 점점 낮아지며 depthwise conv가 잘 학습되도록 돕는다고 한다.

- Previous works

- Kernel-based Linear Attention: The core idea of linear attention [29, 49, 58] is to decompose the similarity measurement function into separate kernel embeddings, i.e., Sim(Q, K) ≈ ϕ (Q) ϕ (K)^T.

- Method

- We propose to consider measuring spectral similarity via angular kernel as an alternative to existing softmax-based attentions: efficient but less powerful linear attention, i.e., without the high-order residuals in angular kernel expansion.

- We add sparse softmax-based attention as an auxiliary branch in the linear-angular attention to help approximate the high-order residuals: powerful yet costly softmax-based masked attention.

5. DETRs Beat YOLOs on Real-time Object Detection_arXiv23

- Contributions

- We design an efficient hybrid encoder to replace the original transformer encoder. By decoupling the intra-scale interaction and cross-scale fusion of multi-scale features, the encoder can efficiently process features.

- We propose IoU-aware query selection, which provides higher-quality initial object queries to the decoder by providing IoU constraints during training.

- To verify this opinion, we rethink the encoder structure and design a range of variants with different encoders, as shown in Fig. 5.

- Summary

- ViT+Knowledge distillation / model compression 능력은 별로다. / 그럼에도 5 guidelines (findings) 논문 전개법은 마음에 든다. / PooingFormer와 성능은 비슷하지만 throughput에서 17%정도 성능 향상있다. / 모든 guideline을 다 따르면 51 -> 75.13 정도의 성능 증가를 얻는다.

- Self-attention이 가장 많은 latency를 차지하니까, 이것을 없애는 방법을 찾고 싶다. Training 단계에서: 나중에 LN에 merging할 수 있도록 MSA를 Affine-parameters로 바꾼다. 모델을 학습하는데 GT를 사용하지 않고 Teacher모델의 feature를 Distillation해서 학습하다. Test 단계에서: 학습한 Affine parameter를 LN에 mergin한다. Identity mapping이란 그냥 feature를 통과시키는 모듈을 의미한다.

- (이렇게 하면 inductive bias가 극도로 생길 것 같은데.. 이 모델이 다른 domain에서 어떻게 동작하는지 확인이 필요하다. 이런 Efficient Transformer들이 다른 domain에서 어떻게 동작하는지 확인하고 inductive bias를 ViT만큼 갖게 하면서 Efficiency를 취할 수 있는 방법을 찾는 것도 좋을 것 같다.)

- Introduction

- We aim to simultaneously maintain the efficiency and efficacy of the token mixer-free vision backbone (namely IdentityFormer) by using knowledge distillation.

- Guidelines

- Soft distillation without using ground-truth labels is more effective.

- Using affine transformation without distillation is difficult to tailor the performance degeneration.

- The proposed block-wise knowledge distillation, called module imitation, helps leveraging the modeling capacity of affine operator.

- Teacher with large receptive field is beneficial to improve receptive field limited student.

- Loading the original weight of teacher model (except the token mixer) into student improve the convergence and performance.

- Motivation

- 위 논문들 중 성능 대비 Throughput이 가장 좋다. Visualization 결과를 보면 forground 찾는 능력이 충분한지는 모르겠다.

- In this paper, we challenge this dense paradigm and present a new method, coined SparseFormer, to imitate human’s sparse visual recognition in an end-to-end manner.

- Method

- [D in Figure 2] In practice, we perform token RoI adjustment first (C), generate sparse sampling points (A), and then use bilinear interpolation to obtain sampled features. We then apply adaptive decoding (B) to these sampled features and add the decoded output back to the token embedding vector.

- The focusing Transformer finds foreground objects, and the subsequent cortex Transformer (standard Transformer encoder) classifies the features.

- SparseFormer recognizes an image in a sparse way by learning a limited number of tokens in the latent space and applying transformers to them. Each latent token t in SparseFormer with an RoI descriptor b = (x, y, w, h).